MegaPov version 0.6

This document contains information about MegaPov features that are not part of the official distribution of POV-Ray 3.1. For documentation on the official features, please refer to the official POV-Ray documentation, which can be obtained from www.povray.org.

MegaPov is a cooperative work by members of the POV-Ray community. This is an unofficial version of POV-Ray. Do not ask the POV-Team for help with this. If you need help with a specific patch, contact the author (patch authors are listed in this document; their email addresses and URLs are listed in the last chapter). You can get the official version of POV-Ray 3.1 from www.povray.org.

MegaPov features are enabled by using:

#version unofficial MegaPov 0.4; // version number may be different

The new features are disabled by default, and only the official version's syntax will be accepted. The above line of POV code must be included in every include file as well, not just the main POV file.

Once the unofficial features have been enabled, they can be again disabled by using:

#version official 3.1;

This is useful to allow backwards compatibility for the "normal bugfix" and layered textures. Remember, though, that the normal bugfix and the layered textures bugfix are now disabled by default.

Backwards compatibility is not available for radiosity.

Changing the unofficial version number has no effect on the official language version number. You can also retrieve the unofficial version number using unofficial_version. The unofficial_version variable returns -1 if unofficial features are disabled. Here's an example:

#version 3.1;
#version unofficial MegaPov 0.4;
#declare a = version;
#declare b = unofficial_version;

Here, a would contain the value 3.1, while b would contain the value 0.4.

Author: Mike Hough

syntax:

camera {
  sphere or spherical_camera
  h_angle FLOAT
  v_angle FLOAT
}

The spherical_camera renders spherical views.

The h_angle is the horizontal angle (default 360) and the v_angle is vertical (default 180). Pretty easy.

If you render an image with a 2:1 aspect ratio and map it to a sphere using spherical mapping, it will recreate the scene! Another use is to map it onto an object: if you specify transformations for the object before the texture, say in an animation, it will look like reflections of the environment (sometimes called environment mapping for the scanline impaired).
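The way h_angle and v_angle spread the image over the sphere can be sketched in Python. This is a minimal sketch, not MegaPov's source: it assumes image coordinates u and v normalized to [0, 1] (v growing downwards), POV-Ray's y-up, z-forward axes, and that the image centre looks straight down +z. The name `spherical_ray` is hypothetical.

```python
import math

def spherical_ray(u, v, h_angle=360.0, v_angle=180.0):
    """Map normalized image coordinates to a unit ray direction.

    u, v are in [0, 1] with v growing downwards (assumption); the image
    centre looks down +z in POV-Ray's y-up coordinate system (assumption).
    """
    lon = math.radians((u - 0.5) * h_angle)  # horizontal angle, 0 at the centre
    lat = math.radians((0.5 - v) * v_angle)  # vertical angle, 0 at the centre
    return (math.sin(lon) * math.cos(lat),
            math.sin(lat),
            math.cos(lon) * math.cos(lat))
```

With the defaults, u spans 360 degrees and v spans 180 degrees, which is why a 2:1 image wraps cleanly onto a sphere with spherical mapping.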

Author: Eric Brown

Bugfixes: Jérôme Grimbert

The circular tag has been added to area lights in order to better create circular soft shadows. Ordinary area lights are rectangular and thus project partly rectangular shadows around all objects, including circular objects. By including the circular tag in an area light, the light is stretched and squashed so that it looks like a circle.

Some things to remember:

Circular lights can be ellipses; just give unequal vectors in the scene definition.

Rectangular artifacts only show up with large area grids.

There is no need to use circular with linear lights or lights which are 2 by 2.

The area of a circular light will be 78.5% (pi/4) of a similarly sized rectangular light (the problem of squaring the circle), so increase your vectors accordingly.
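One way to picture how a square grid of lights is "stretched and squashed" into a circle is a square-to-disk mapping. This sketch uses the elliptical grid mapping; whether MegaPov uses this particular mapping is an assumption, and the function names are hypothetical. It also checks the 78.5% figure and the factor by which both axis vectors would have to grow to restore the original area.

```python
import math

def square_to_disk(x, y):
    """Map a point of the square [-1, 1]^2 into the unit disk
    (elliptical grid mapping; assumed, not taken from MegaPov)."""
    return (x * math.sqrt(1.0 - y * y / 2.0),
            y * math.sqrt(1.0 - x * x / 2.0))

def circular_grid(size):
    """Positions of a size x size area-light grid (size >= 2), squashed into a unit circle."""
    coords = [-1.0 + 2.0 * i / (size - 1) for i in range(size)]
    return [square_to_disk(x, y) for y in coords for x in coords]

# A disk inscribed in a square covers pi/4 of its area (about 78.5%),
# so scaling both axis vectors by sqrt(4/pi) (about 1.128) restores the area.
AREA_RATIO = math.pi / 4.0
SCALE_FOR_EQUAL_AREA = math.sqrt(4.0 / math.pi)
```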

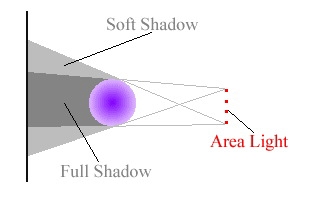

The orient tag has been added to area lights in order to better create soft shadows. Ordinary area lights are 2D (normal lights are 1D); however, if you improperly give the axes to an area light, it can act like a 1D light. By including the orient tag in an area light, the light is oriented so that it acts like a 3D light.

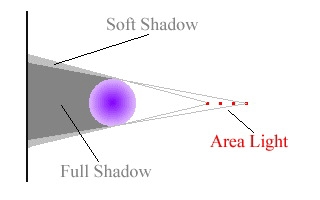

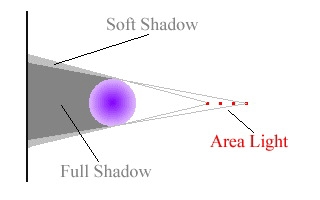

The figures illustrate the problem with normal area lights. The axes determine the plane in which the lights are situated. However, this plane can be incorrect for some objects, and as a result the soft shadow can be squashed.

The orient tag fixes this by making sure that the plane of the area light is always perpendicular to the line from the light to the point which is being tested for a shadow (see the figures).

Author: Matthew Corey Brown

syntax

light_source {
  ...
  groups "name1,name2,name3,..."
}

object {
  ...
  light_group "name1"
  no_shadow "name2"
}

media {
  ...
  light_group "!name3"
}

defaults:

light_group "all" no_shadow "none"

This controls how lights interact with media and objects. There can be a maximum of 30 user-defined light groups; "none" and "all" are predefined. Groups can be given any name without spaces, and multiple groups are delimited by commas after the groups keyword. All lights are automatically in the "all" group.

If a light_group or no_shadow group name begins with a !, it is interpreted to mean all lights not in the group that follows the !.

If a light doesn't interact with a media or object then no shadows are cast from that object for that light.
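The group-matching rules above can be sketched as a small membership test. This is a minimal sketch, not MegaPov's parser: `parse_groups` and `light_in_group` are hypothetical names, and the same test is assumed to apply to both light_group and no_shadow.

```python
def parse_groups(text):
    """Split a groups string such as "key,fill" (names contain no spaces)."""
    return [g for g in text.split(",") if g]

def light_in_group(light_groups, spec):
    """True if a light belonging to `light_groups` is selected by a
    light_group / no_shadow specification such as "name1" or "!name3"."""
    groups = set(light_groups) | {"all"}  # every light is in "all"
    negate = spec.startswith("!")
    name = spec[1:] if negate else spec
    member = name in groups               # no light is ever in "none"
    return not member if negate else member
```

With the defaults, light_group "all" selects every light and no_shadow "none" selects none, so objects and media behave as in official POV-Ray.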

Author: Ronald L. Parker

syntax:

light_source {
  ...
  parallel
  point_at VECTOR
}

Parallel lights shoot rays from the closest point on a plane to the object intersection point. The plane passes through the light location and is perpendicular to the direction from the light location to point_at, so all rays travel parallel to that direction.

For normal point lights, point_at must come after parallel.

fade_distance and fade_power use the light location to determine distance for light attenuation.

This works with all other kinds of light sources: spot, cylinder, and point lights, and even area lights.
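The plane geometry of a parallel light can be sketched as projecting the intersection point onto the plane through the light location. This is a minimal sketch; `parallel_light_origin` is a hypothetical name, and it only computes where the shadow ray starts, not the lighting itself.

```python
def parallel_light_origin(location, point_at, hit_point):
    """Closest point to `hit_point` on the plane through `location` whose
    normal is the direction from `location` to `point_at`."""
    n = [b - a for a, b in zip(location, point_at)]
    nn = sum(c * c for c in n)
    # distance from the plane to the hit point, measured in units of n
    t = sum((h - l) * c for h, l, c in zip(hit_point, location, n)) / nn
    return tuple(h - t * c for h, c in zip(hit_point, n))
```

For example, a light at <0, 10, 0> pointing at the origin lights the point <3, 0, 4> from <3, 10, 4>, straight above it. Attenuation (fade_distance, fade_power) still measures from the light location, as noted above.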

Author: Nathan Kopp

My latest fun addition to POV is the photon map. The basic goal of this implementation of the photon map is to render true reflective and refractive caustics. The photon map was first introduced by Henrik Wann Jensen <http://www.gk.dtu.dk/home/hwj/>

Photon mapping is a technique which uses a backwards ray-tracing pre-processing step to render refractive and reflective caustics realistically. This means that mirrors can reflect light rays and lenses can focus light.

Photon mapping works by shooting packets of light (photons) from light sources into the scene. The photons are directed towards specific objects. When a photon hits an object after passing through (or bouncing off of) the target object, the ray intersection is stored in memory. This data is later used to estimate the amount of light contributed by reflective and refractive caustics.

I wrote a paper about this for my directed study. The paper is called Simulating Reflective and Refractive Caustics in POV-Ray Using a Photon Map, and you can download it in zipped postscript format at http://nathan.kopp.com/nk_photons.zip (size: about 800 KB).

Current Limitations


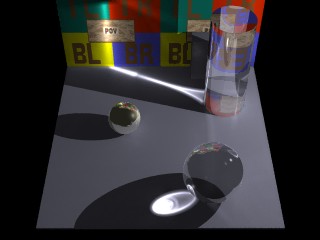

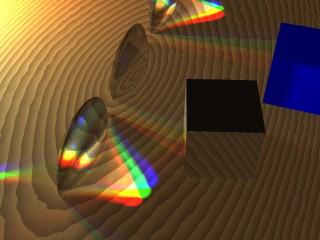

This image shows refractive caustics from a sphere and a cylinder. Both use an index of refraction of 1.2. Also visible is a small amount of reflective caustics from the metal sphere, and also from the clear cylinder and sphere.


Here we have three lenses and three light sources. The middle lens has photon mapping turned off. You can also see some reflective caustics from the brass box (some light reflects and hits the blue box, other light bounces through the nearest lens and is focused in the lower left corner of the image).

To use photon mapping in your scene, you need to provide POV with two pieces of information. First, you need to specify details about photon gathering and storage. Second, you need to specify which objects receive photons, which lights shoot photons, and how close the photons should be spaced.

To specify photon gathering and storage options you need to add a photons block to the global_settings section of your scene.

Here’s an example:

global_settings {
  photons {
    count 20000
    autostop 0
    jitter .4
  }
}

global_photon_block:

photons {
  spacing <photon_spacing> | count <photons_to_shoot>
  [gather <min_gather>, <max_gather>]
  [global <global_photons_to_shoot>]
  [media <max_steps> [,<factor>]]
  [reflection_blur on|off]
  [jitter <jitter_amount>]
  [max_trace_level <photon_trace_level>]
  [adc_bailout <photon_adc_bailout>]
  [save_file "filename" | load_file "filename"]
  [autostop <autostop_fraction>]
  [expand_thresholds <percent_increase>, <expand_min>]
  [radius <gather_radius>]
  [steps <num_gather_steps>]
}

The number of photons generated can be set using either the spacing keyword (the spacing between photons) or the count keyword (the number of photons to shoot).

The keyword gather allows you to specify how many photons are gathered at each point during the regular rendering step. The first number (default 20) is the minimum number to gather, while the second number (default 100) is the maximum number to gather. These are good values and you should only use different ones if you know what you’re doing. See the advanced options section for more information.

The keyword global turns on global photons and allows you to specify a count for the number of global photons to shoot.

The keyword media turns on media photons. The parameter max_steps specifies the maximum number of photons to deposit over an interval. The optional parameter factor specifies the difference in media spacing compared to surface spacing. You can increase factor and decrease max_steps if too many photons are being deposited in media.

The keyword reflection_blur allows you to specify whether photons pay attention to reflection blur or not. Usually, blurry caustics can be achieved easily by using fewer photons in the photon map, reducing the need to use true reflection blurring when creating the photon map. Turning on reflection_blur will increase the number of photons if any surfaces use reflection_blur with multiple samples.

The keyword jitter specifies the amount of jitter used in the sampling of light rays in the pre-processing step. The default value is good and usually does not need to be changed.

The keywords max_trace_level and adc_bailout allow you to specify these attributes for the photon-tracing step. If you do not specify these, the values for the primary ray-tracing step will be used.

The keywords save_file and load_file allow you to save and load photon maps. If you load a photon map, no photons will be shot. The photon map file contains all surface (caustic), global, and media photons.

The keyword radius allows you to specify the initial radius used to gather photons. It is suggested that you only specify this if necessary. If you do not specify a gather radius, it will be computed automatically by statistically analyzing the photon map. In some cases you may have to specify it manually, however (which is why the option remains available).

The keywords autostop, steps, and expand_thresholds will be explained later.

To shoot photons at an object, you need to tell POV that the object receives photons. To do this, create a photons { } block within the object. Here is an example:

object {
  MyObject
  photons {
    target
    refraction on
    reflection on
    ignore_photons
  }
}

object_photon_block:

photons {
  [target [<spacing_multiplier>]]
  [refraction on|off]
  [reflection on|off]
  [ignore_photons]
  [pass_through]
}

In this example, the object both reflects and refracts photons. Either of these options could be turned off (by specifying reflection off, for example). By using this, you can have an object with a reflective finish which does not reflect photons for speed and memory reasons.

The keyword target makes this object a target.

The density of the photons can be adjusted by specifying the spacing_multiplier. If, for example, you specify a spacing_multiplier of 0.5, then the spacing for photons hitting this object will be 1/2 of the distance of the spacing for other objects.

Note that this means four times as many surface photons, and eight times as many media photons.
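The arithmetic behind "four times" and "eight times" is that photon count scales with the inverse spacing squared on a surface and cubed in a volume. A minimal sketch (the function name is hypothetical):

```python
def photon_count_factor(spacing_multiplier):
    """How many times more photons a target receives for a given multiplier."""
    linear = 1.0 / spacing_multiplier
    return {"surface": linear ** 2,  # photons cover a 2D surface
            "media": linear ** 3}    # photons fill a 3D volume
```

A multiplier of 0.5 gives factors of 4 and 8; a multiplier of 2 would give a quarter of the surface photons and an eighth of the media photons.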

The keyword ignore_photons causes the object to ignore photons. Photons are neither deposited nor gathered on that object.

The keyword pass_through causes photons to pass through the object unaffected on their way to a target object. Once a photon hits the target object, it will ignore the pass_through flag. This is basically a photon version of the no_shadow keyword, with the exception that media within the object will still be affected by the photons (unless that media specifies ignore_photons). New in version 0.5: If you use the no_shadow keyword, the object will be tagged as pass_through automatically. You can then turn off pass_through if necessary by simply using "photons{pass_through off}".

Note: Photons will not be shot at an object unless you specify the target keyword. Simply turning refraction on will not suffice.

light_source {
  MyLight
  photons {
    global
    refraction on
    reflection on
  }
}

light_photon_block:

photons {
  [global [<spacing_multiplier>]]
  [refraction on|off]
  [reflection on|off]
  [area_light]
}

Sometimes, you want photons to be shot from one light source and not another. In that case, you can turn photons on for an object, but specify "photons {reflection off refraction off}" in the light source’s definition. You can also turn off only reflection or only refraction for any light source.

Light sources can also generate global photons. To enable this feature, put the keyword global in the light source's photon block. The keyword global can be followed by an optional spacing_multiplier float value which adjusts the spacing for global photons emitted from that light source. If, for example, you specify a spacing_multiplier of 0.5, then the spacing for global photons emitted by this light will be 1/2 of the distance of the spacing for other objects. Note that this means four times as many surface photons, and eight times as many media photons (there are currently no global media photons, but eventually that feature will be enabled).

global_settings {
  photons {
    count 10000
    media 100
  }
}

Photons also interact fully with media. This means that volumetric photons are stored in scattering media. This is enabled by using the keyword media within the photons block.

To store photons in media, POV deposits photons as it steps through the media during the photon-tracing phase of the render. It will deposit these photons as it traces caustic photons, so the number of media photons is dependent on the number of caustic photons. As a light ray passes through a section of media, the photons are deposited, separated by approximately the same distance that separates surface photons.

You can specify a factor as a second optional parameter to the media keyword. If, for example, factor is set to 2.0, then photons will be spaced twice as far apart as they would otherwise have been spaced.

Sometimes, however, if a section of media is very large, using these settings could create a large number of photons very fast and overload memory. Therefore, following the media keyword, you must specify the maximum number of photons that are deposited for each ray that travels through each section of media. A setting of 100 will probably work for most scenes.
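How factor and max_steps combine can be sketched for a single ray crossing one section of media. This is a minimal sketch under assumptions: the function name is hypothetical, photons are assumed to sit at the middle of each step, and when the cap is hit the capped photons are assumed to be spread over the whole interval. MegaPov may instead stop depositing once the cap is reached.

```python
def media_deposit_positions(interval_length, surface_spacing, max_steps, factor=1.0):
    """Distances along one ray segment at which media photons would be stored."""
    step = surface_spacing * factor        # factor 2.0 doubles the media spacing
    count = int(interval_length / step)
    if count > max_steps:                  # cap per ray, per media section
        count = max_steps
        step = interval_length / count     # assumption: spread the capped photons out
    return [step * (i + 0.5) for i in range(count)]
```

For a 10-unit interval with spacing 0.5, this deposits 20 photons, or 10 with factor 2.0; a 1000-unit interval would need 2000 but is capped at max_steps.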

You can put ignore_photons into media to make that media ignore photons. Photons will neither be deposited nor gathered in a media that is ignoring them. Photons will also not be gathered nor deposited in non-scattering media. However, if multiple medias exist in the same space, and at least one does not ignore photons and is scattering, then photons will be deposited in that interval and will be gathered for use with all media in that interval.

I made an object with IOR 1.0 and the shadows look weird. Why?

If the borders of your shadows look odd when using photon mapping, don’t be alarmed. This is an unfortunate side-effect of the method. If you increase the density of photons (by decreasing spacing and gather radius) you will notice the problem diminish. I suggest not using photons if your object does not cause much refraction (such as with a window pane or other flat piece of glass or any objects with an IOR very close to 1.0).

My scene takes forever to render. Why?

When POV-Ray builds the photon maps, it continually displays in the status bar the number of photons that have been shot. Is POV-Ray stuck in this step and does it keep shooting lots and lots of photons?

yes

If you are shooting photons at an infinite object (like a plane), then you should expect this. Either be patient or do not shoot photons at infinite objects.

Are you shooting photons at a CSG difference? Sometimes POV-Ray does a bad job creating bounding boxes for these objects. And since photons are shot at the bounding box, you could get bad results. Try manually bounding the object. You can also try the autostop feature (try "autostop 0"). See the docs for more info on autostop.

no

Does your scene have lots of glass (or other clear objects)? Glass is slow and you need to be patient.

My scene has polka dots but renders really quickly. Why?

You should increase the number of photons (or decrease the spacing).

The photons in my scene show up only as small, bright dots. How can I fix this?

The automatic calculation of the gather radius is probably not working correctly, most likely because there are many photons not visible in your scene which are affecting the statistical analysis.

You can fix this by either reducing the number of photons that are in your scene but not visible to the camera (which confuse the auto-computation), or by specifying the initial gather radius manually by using the keyword radius. If you must manually specify a gather radius, it is usually best to also use spacing instead of count, and then set radius and spacing to a 5:1 (radius:spacing) ratio.

Adding photons slowed down my scene a lot, and I see polka dots. Why?

This is usually caused by having both high- and low-density photons in the same scene. The low-density ones cause polka dots, while the high-density ones slow down the scene. It is usually best if all photons are on the same order of magnitude for spacing and brightness. Be careful if you are shooting photons at objects both close to and far from a light source. There is an optional parameter to the target keyword which allows you to adjust the spacing of photons at the target object. You may need to adjust this factor for objects very close to or surrounding the light source.

I added photons, but I don’t see any caustics. Why?

When POV-Ray builds the photon maps, it continually displays in the status bar the number of photons that have been shot. Did it show any photons being shot?

no

If your object has a hole in the middle, do not use the autostop feature (or be very careful with it).

If your object does not have a hole in the middle, you might also try avoiding autostop, or you might want to bound your object manually. As of MegaPov 0.5, you should be able to use "autostop 0" even with objects that have holes in the middle.

Try increasing the number of photons (or decreasing the spacing).

yes

Were any photons stored (the number after "total" in the rendering message as POV shoots photons)?

no

It is possible that the photons are not hitting the target object (because another object is between the light source and the target object). Note that photons ignore the "no_shadow" keyword. You should use photons {pass_through} if you want photons to pass through objects unaffected.

yes

The photons may be diverging more than you expect. They are probably there, but you can’t see them since they are spread out too much.

The base of my glass object is really bright. Why?

Use ignore_photons with that object.

Will area lights work with photon mapping?

Photons do work with area lights. However, normally photon mapping ignores all area light options and treats all light sources as point lights. If you would like photon mapping to use your area light options, you must specify the "area_light" keyword within the photons {} block in your light source's code. Doing this will not increase the number of photons shot by the light source, but it might cause regular patterns to show up in the rendered caustics (possibly splotchiness).

What do the stats mean?

In the stats, "photons shot" means how many light rays were shot from the light sources. "photons stored" means how many photons are deposited on surfaces in the scene. If you turn on reflection and refraction, you could get more photons stored than photons shot, since each ray can get split into two.

Autostop

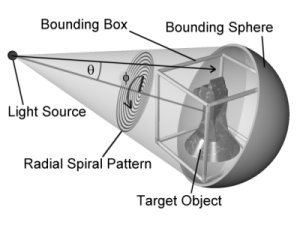

To understand the autostop option, you need to understand the way photons are shot from light sources. Photons are shot in a spiral pattern with uniform angular density. Imagine a sphere with a spiral starting at one of the poles and spiraling out in ever-increasing circles to the equator. Two angles are involved here. The first, phi, is how far progress has been made in the current circle of the spiral. The second, theta, is how far we are from the pole to the equator. Now, imagine this sphere centered at the light source with the pole where the spiral starts pointed towards the center of the object receiving photons. Photons are shot out of the light in this spiral pattern.
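The spiral can be approximated as a sequence of rings around the pole, each with a number of photons proportional to its circumference. This is a minimal sketch, not MegaPov's shooting code: it replaces the continuous spiral with concentric rings, theta is measured from the direction pointing at the target's centre, and the function name and step size `d_theta` are hypothetical.

```python
import math

def spiral_directions(max_theta, d_theta):
    """Yield (theta, phi) pairs ring by ring, starting at the pole and moving
    outwards, with roughly uniform angular density."""
    theta = 0.0
    while theta <= max_theta:
        # a ring at angle theta has circumference proportional to sin(theta),
        # so it gets proportionally many photons (at least one, at the pole)
        n = max(1, round(2.0 * math.pi * math.sin(theta) / d_theta))
        for k in range(n):
            yield theta, 2.0 * math.pi * k / n
        theta += d_theta
```

Shooting out to the equator (max_theta = pi/2) covers a hemisphere; a target far from the light only needs the few rings that cover its bounding box.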


Normally, POV does not stop shooting photons until the target object’s entire bounding box has been thoroughly covered. Sometimes, however, an object is much smaller than its bounding box. At these times, we want to stop shooting if we do a complete circle in the spiral without hitting the object. Unfortunately, some objects (such as copper rings) have holes in the middle. Since we start shooting at the middle of the object, the photons just go through the hole in the middle, thus fooling the system into thinking that it is done. To avoid this, the autostop keyword lets you specify how far the system must go before this auto-stopping feature kicks in. The value specified is a fraction of the object's bounding box. Valid values are 0.0 through 1.0 (0% through 100%). POV will continue to shoot photons until the spiral has exceeded this value or the bounding box is completely covered. If a complete circle of photons fails to hit the target object after the spiral has passed the autostop threshold, POV will then stop shooting photons.

The autostop feature will also not kick in until at least one photon has hit the object. This allows you to use "autostop 0" even with objects that have holes in the middle.
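The stopping rule can be sketched from per-ring hit results. This is a minimal sketch under assumptions: each ring is summarized as "did any photon hit the target", progress through the bounding box is approximated by the fraction of rings shot, and `rings_to_shoot` is a hypothetical name.

```python
def rings_to_shoot(ring_hits, autostop):
    """Number of spiral rings shot before autostop ends shooting.

    ring_hits[i] is True if any photon of ring i hit the target; together the
    rings cover the whole bounding box, so len(ring_hits) is the natural end.
    """
    total = len(ring_hits)
    hit_anything = False
    for i, hit in enumerate(ring_hits):
        hit_anything = hit_anything or hit
        progress = (i + 1) / total  # assumed: fraction of the bounding box covered
        # stop on a fully missed ring, but only past the autostop threshold
        # and only after at least one photon has hit the object
        if not hit and hit_anything and progress > autostop:
            return i + 1
    return total  # bounding box completely covered
```

For a ring-shaped object whose first two rings pass through the hole, "autostop 0" still works: the early misses are ignored because nothing has hit the object yet.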

Note: If the light source is within the object's bounding box, the photons are shot in all directions from the light source.

Adaptive Search Radius

Many scenes contain some areas with photons packed closely together and some areas where the photons are spaced far apart. This can cause problems, since, for speed reasons, an initial gather radius is used for a range-search of the photon map. Generally, this radius is automatically computed by statistically analyzing the photon map, but it can be manually specified (using the radius keyword) if the statistical analysis produces poor results.

The solution is the adaptive search radius. The gather keyword controls both the minimum and maximum number of photons to be gathered. If the minimum number of photons is not found in the original search radius, we can expand that radius and search again. The steps keyword allows you to specify how many total times to search. The default value for steps is 2.

Using the adaptive search radius correctly can both decrease the amount of time it takes to render the image, and sharpen the borders in the caustic patterns. Usually, using 2 (the default) as the number of steps is good (the initial search plus one expansion). Using too many expansions can slow down the render in areas with no photons, since the system searches, finds nothing, expands the radius, and searches again.

Sometimes this adaptive search technique can create unwanted artifacts at borders (see "Simulating Reflective and Refractive Caustics in POV-Ray Using a Photon Map", Nathan Kopp, 1999). To remove these artifacts, a few thresholds are used, which can be specified by expand_thresholds. For example, if expanding the radius increases the estimated density of photons by too much (threshold is percent_increase, default is 20%, or 0.2), the expanded search is discarded and the old search is used instead. However, if too few photons are gathered in the expanded search (expand_min, default is 40), the new search will be used always, even if it means more than a 20% increase in photon density.
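The search, expansion, and expand_thresholds checks can be sketched for one gather point. This is a minimal sketch, not MegaPov's code: the radius growth per expansion (`growth`) is a hypothetical value, photon density is estimated simply as count per disk area, and an initial search that finds nothing is assumed to always accept the expansion.

```python
import math

def adaptive_gather(distances, radius, steps=2, min_gather=20, max_gather=100,
                    percent_increase=0.2, expand_min=40, growth=2.0):
    """Return (photons_used, radius_used) for one gather point.

    `distances` are the distances from the gather point to each photon.
    `growth` (radius multiplier per expansion) is a hypothetical value.
    """
    def search(r):
        found = sorted(d for d in distances if d <= r)[:max_gather]
        return found, len(found) / (math.pi * r * r)  # photons per unit area

    found, density = search(radius)
    for _ in range(steps - 1):
        if len(found) >= min_gather:
            break                                      # enough photons already
        new_r = radius * growth
        new_found, new_density = search(new_r)
        too_bright = bool(found) and new_density > density * (1.0 + percent_increase)
        if too_bright and len(new_found) >= expand_min:
            break                                      # discard the expanded search
        found, density, radius = new_found, new_density, new_r
    return found, radius
```

With the default steps of 2, there is at most one expansion, which matches "the initial search plus one expansion" above.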

Dispersion

Daren Scott Wilson’s dispersion patch has been incorporated into the photon mapping patch. To use it with photons, you need to specify a color_map in your light source. The color_map will override the light’s color (although you still need to specify the color of the light). The color_map determines the color spectrum for that light source.

I have created a macro, "create_spectrum", which creates a color_map for use with lights. This macro is based on Mr. Wilson’s dispersion patch. See the demo scene "prism.pov" for this macro.

Saving and Loading Photon Maps

It is possible to save and load photon maps to speed up rendering. The photon map itself is view-independent, so if you want to animate a scene that contains photons and you know the photon map will not change during the animation, you can save it on the first frame and then load it for all subsequent frames.

To save the photon map, put the line

save_file "myfile.ph"

into the photons { } block inside the global_settings section.

Loading the photon map is the same, but with load_file instead of save_file. You cannot both load and save a photon map in the POV file. If you load the photon map, it will load all of the photons as well as the range_divider value. No photons will be shot if the map is loaded from a file. All other options (such as gather radius) must still be specified in the POV scene file and are not loaded with the photon map.

Those who have mastered the old syntax for photons may wish to convert old scenes (prior to MegaPov 0.4 and UV-Pov) to the new syntax. This is actually relatively easy.

In each object, simply change the keyword separation to target. The parameter after it remains.

You probably didn't use global photons before, so you do not need to change light sources.

In global settings, either remove radius altogether (it will be automatically computed), or delete the second and third parameters (leaving the first parameter).

If you used a "phd" variable in your scene, you can use the spacing keyword within global_settings. The value specified after spacing will adjust the spacing for all objects that are targets. spacing does not automatically adjust the radius, however.

If you wish to use only one gather step, add the line "steps 1" inside global_settings.

The steps keyword does the same thing that the second parameter to radius used to do.

If you used media photons, you'll have to change things a bit. The new syntax is:

media <max_steps> [,<factor>]

You're done.

Yes, it is that easy to convert old scenes. And now it is even easier to create new scenes that use photons.

Author: Nathan Kopp

The phong and specular highlighting models available in POV-Ray are all right, but they are quite simplified models. A better model has been developed over the years. This is the Torrance-Sparrow-Blinn-Cook microfacet highlight model.

Blinn highlights (as they will be called) use statistical methods to simulate microfacets on the surface to produce the highlight. The model uses the Fresnel reflectivity equation, which determines reflectivity from the IOR (index of refraction) of the material. For this reason, you must use an interior with an IOR if you want to use blinn highlights.

As with phong and specular, the size of blinn highlights can be adjusted. To do this, use the facets keyword. The float value following this keyword specifies the average (r.m.s.) slope of the microfacets. A low value means shallow microfacets, which leads to small highlights. A high value means high slope (very bumpy), which leads to large, soft highlights.
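The effect of the r.m.s. slope can be seen in the Beckmann microfacet distribution, the slope distribution used in the Cook-Torrance form of this model. This is a sketch of the standard formula only; whether MegaPov evaluates exactly this expression is an assumption, and `beckmann` is a hypothetical name.

```python
import math

def beckmann(cos_alpha, m):
    """Beckmann microfacet distribution (Cook-Torrance).

    cos_alpha: cosine of the angle between the surface normal and the
               halfway vector; m: r.m.s. microfacet slope (the facets value).
    """
    cos2 = cos_alpha * cos_alpha
    tan2 = (1.0 - cos2) / cos2
    return math.exp(-tan2 / (m * m)) / (math.pi * m * m * cos2 * cos2)
```

A small m gives a tall, narrow peak (a small, sharp highlight); a large m flattens and widens it (a large, soft highlight), as described above.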

Example:

sphere {
  0.0, 1
  texture {
    pigment {radial frequency 8}
    finish { blinn 1 facets .2 }
  }
  interior {
    ior 20 // this is a guess for IOR
  }
}

In "ordinary" specular reflection, the reflected ray hits a single point, so there is no dispute as to its color. But many materials have microscopic "bumpiness" that scatters the reflected rays into a cone shape, so the color of a reflected "ray" is the average color of all the points the cone hits.

POV-Ray cannot trace a cone of light, but it CAN take a statistical sampling of it by tracing multiple "jittered" reflected rays.

Normally, when a ray hits a reflective surface, another ray is fired at a matching angle, to find the reflective color. When you specify a blurring amount, a vector is generated whose direction is random and whose length is equal to the blurring factor. This "jittering" vector is added to the reflected ray's direction vector, and the result is normalized because weird things happen if it isn't.

One pitfall you should keep in mind stems from the fact that surface normals always have a length of 1. If you specify a blurring factor greater than one, the reflected ray's direction will be based more on randomness than on the direction it "should" go, and it's possible for a ray to be "reflected" THROUGH the surface it's supposed to be bouncing off of.

Since having reflected rays going all over the place will introduce a certain amount of statistical "noise" into your reflections, you have the option of tracing more than one jittered ray for each reflection. The colors found by the rays are averaged, which helps to smooth the reflection. In a texture that already has some noise in it from the pigment, or if you're not using a lot of blurring, 5 or 10 samples should be fine. If you want to make a smooth flat mirror with a lot of blurring you may need upwards of 100 samples per pixel. For preview renders where the reflection doesn't need to be smooth, use 1 sample, since it renders as fast as unblurred reflection.
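The jitter-and-average procedure can be sketched as follows. This is a minimal sketch, not MegaPov's code: `trace` is a hypothetical stand-in for POV-Ray's ray tracer that returns an RGB color for a direction, and the random direction is drawn uniformly on the sphere using Gaussian sampling.

```python
import math
import random

def _normalize(v):
    length = math.sqrt(sum(c * c for c in v))
    return tuple(c / length for c in v)

def random_unit_vector(rng):
    """Uniformly distributed random direction (normalized Gaussian sample)."""
    return _normalize(tuple(rng.gauss(0.0, 1.0) for _ in range(3)))

def blurred_reflection(reflected_dir, blur, samples, trace, rng=random):
    """Average the colors returned by `trace` for jittered reflected rays."""
    if blur == 0:
        samples = 1  # no blur: one sample, regardless of reflection_samples
    total = [0.0, 0.0, 0.0]
    for _ in range(samples):
        jitter = tuple(c * blur for c in random_unit_vector(rng))  # length == blur
        direction = _normalize(tuple(r + j for r, j in zip(reflected_dir, jitter)))
        color = trace(direction)
        total = [t + c for t, c in zip(total, color)]
    return tuple(t / samples for t in total)
```

With blur below 1, the jittered direction always stays on the same side as the original reflected ray, which is why factors above 1 can send rays through the surface.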

reflection_blur

Specifies how much blurring to apply. Put this in finish. A vector with this length and a random direction is added to each reflected ray's direction vector, to jitter it.

reflection_samples

Specifies how many samples per pixel to take. It may also be placed in global_settings to change the default without having to specify a whole default texture. Each time a ray hits a reflective surface, this many jittered reflected rays are fired and their colors are averaged. If the finish has a reflection_blur of zero, only one sample will be used regardless of this setting.

Author: Nathan Kopp

One of the features in MegaPov is variable reflection, including realistic Fresnel reflection (see the chapter about 'Variable reflection'). Unfortunately, when this is coupled with constant transmittance, the texture can look unrealistic. This unrealism is caused by the scene breaking the law of conservation of energy. As the amount of light reflected changes, the amount of light transmitted should also change (in a give-and-take relationship).

This can be achieved in MegaPov by adding "conserve_energy" to the object's finish{}. When conserve_energy is enabled, POV will multiply the amount filtered and transmitted by what is left over from reflection (for example, if reflection is 80%, filter/transmit will be multiplied by 20%). This (with a nice granite normal and either media or realistic fade_power) can be used to produce some very realistic water textures.
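The conserve_energy rule is a single multiplication. This sketch treats reflection, filter, and transmit as plain floats (for colors, the same rule would be applied per channel, which is an assumption); the function name is hypothetical.

```python
def conserve_energy(reflection, filter_, transmit):
    """Scale filter and transmit by the light not already reflected."""
    remaining = 1.0 - reflection
    return filter_ * remaining, transmit * remaining
```

With 80% reflection, a transmit of 1.0 becomes 0.2 and a filter of 0.5 becomes 0.1. When reflection varies with angle, the transmitted light varies in the opposite direction automatically.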

Many materials tint their reflected light by their surface color. A red Christmas-ornament ball, for example, reflects only red light; you wouldn't expect it to reflect blue. Since the "reflection" keyword takes a color vector, the common "reflection 1" actually gets promoted to "reflection rgb <1, 1, 1>", which would make the ornament reflect green and blue light as well. You'd have to say "reflection Red" to make the ornament reflect correctly.

But what happens if an object's color is different on parts of it? What happens, for example, if you have a Christmas ornament that's red on one side and yellow on the other, with a smooth fade between the two colors? The red side should only reflect red light, but the yellow side should reflect both red and green. (yellow = red + green) Ordinarily, there is no way to accomplish this.

Hence, there is a new feature: metallic reflection. It loosely corresponds to the "metallic" keyword, which affects Phong and specular highlights, but metallic reflection multiplies the reflection color by the pigment color at each point to determine the reflection color for that point. A value of "reflection 1" on a red object will reflect only red light, but the same value on a yellow object reflects yellow light.

reflect_metallic

Put this in finish.

Multiplies the "reflection" color vector by the pigment color at each point where light is reflected, to better model the reflectivity of metallic finishes. Like the "metallic" keyword, you can specify an optional float value, which is the amount of influence the reflect_metallic keyword has on the reflected color.

If this number is omitted it defaults to 1.

Note by Nathan Kopp: I have modified this to behave more like the "metallic" keyword, in that it uses the Fresnel equation so that the color of the light is reflected at glancing angles, and the color of the metal is reflected for angles close to the surface’s normal.
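The red-and-yellow ornament described above might be written as follows. The pigment transform only spreads one red-to-yellow fade across the unit sphere, and the values are illustrative.

```pov
#version unofficial MegaPov 0.6;

sphere {
  0, 1
  pigment {
    gradient x
    color_map {
      [0.0 rgb <1, 0, 0>]   // red side reflects only red
      [1.0 rgb <1, 1, 0>]   // yellow side reflects red and green
    }
    translate -0.5*x
    scale 2                 // one fade from x = -1 to x = 1
  }
  finish {
    reflection 1
    reflect_metallic        // same as reflect_metallic 1
  }
}
```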

Many materials, such as water, ceramic glaze, and linoleum are more reflective when viewed at shallow angles. POV-Ray cannot simulate this, which is sometimes a big impediment to making realistic images.

The only real reflectivity model I know of right now is the Fresnel function, which uses the IOR of a substance to calculate its reflectivity at any given angle. Naturally, it doesn't work for opaque materials, which don't have an IOR.

However, in many cases it isn't the opaque object doing the reflecting; ceramic tiles, for instance, have a thin layer of transparent glaze on the surface, and it is the glaze (which -does- have an IOR) that is reflective.

However, for those "other" materials, I've extended the standard reflectivity function to use not one but TWO reflectivity values. The "maximum" value is the reflectivity observed when the material is viewed at an angle perpendicular to its normal. The "minimum" is the reflectivity observed when the material is viewed "straight down", parallel to its normal. You CAN make the minimum greater than the maximum - it will work, although you'll get results that don't occur in nature. The "falloff" value specifies an exponent for the falloff from maximum to minimum as the angle changes. I don't know for sure what looks most realistic (this isn't a "real" reflectivity model, after all), but a lot of other physical properties seem to have squared functions so I suggest trying that first.

reflection_type

Chooses the reflectivity function.

The default reflection_type is zero, which has new features but is backward-compatible. (It uses the 'reflection' keyword.)

A value of 1 selects the Fresnel reflectivity function, which calculates reflectivity using the finish's IOR. This is not useful for opaque textures, but remember that for things like ceramic tiles, it's the transparent glaze on top of the tile that's doing the reflecting.

Also, Fresnel reflection (reflection_type 1) now pays attention to the reflection_min and reflection_max settings, including colors. The old-style Fresnel reflection always used reflection_min 0.0 and reflection_max 1.0. If reflection_max is zero (or not specified), then MegaPov will default to 0.0 and 1.0 if you use reflection_type 1.
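A sketch of Fresnel reflection clamped by reflection_min and reflection_max follows. As with conserve_energy, it assumes the IOR comes from interior { ior }. The clamp values are arbitrary.

```pov
#version unofficial MegaPov 0.6;

sphere {
  0, 1
  pigment { rgbf <1, 1, 1, 0.95> }
  finish {
    reflection_type 1     // Fresnel, computed from the IOR
    reflection_min 0.02   // lower limit on the Fresnel result
    reflection_max 0.9    // upper limit on the Fresnel result
  }
  interior { ior 1.5 }    // assumed source of the IOR
}
```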

reflection_min

Sets the minimum reflectivity.

For reflection_type 0, this is how reflective the surface will be when viewed from a direction parallel to its normal.

For reflection_type 1, this will be the minimum reflection.

reflection_max

Sets the maximum reflectivity.

For reflection_type 0, this is how reflective the surface will be when viewed at a 90-degree angle to its normal.

For reflection_type 1, this will be the maximum reflection.

You can make reflection_min greater than reflection_max if you want, although the result is something that doesn't occur in nature.

reflection_falloff

Sets the falloff exponent for reflection_type 0.

This is the exponent telling how fast the reflectivity will fall off, i.e. linear, squared, cubed, etc.

reflection

Convenience and backward compatibility.

This is the old "reflection" keyword. It sets reflection_type to 0, sets both reflection_min and reflection_max to the value provided, and sets reflection_falloff to 1.
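A glazed tile using reflection_type 0 with different minimum and maximum values and the suggested squared falloff could look like this. The numbers are starting points, not measured values.

```pov
#version unofficial MegaPov 0.6;

box {
  <-1, 0, -1>, <1, 0.05, 1>
  pigment { rgb <0.6, 0.1, 0.1> }
  finish {
    reflection_type 0      // the default, listed for clarity
    reflection_min 0.05    // seen straight down, along the normal
    reflection_max 0.7     // seen at 90 degrees to the normal (grazing)
    reflection_falloff 2   // squared falloff between the two
  }
}
```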

Authors: Mike Hough (method 2) and Nathan Kopp (method 3)

The new media keyword for the sample method, written "method" in the scene file, can be 1, 2 or 3.

Sample method 1 is the old method of taking samples.

Sample method 2 (invoked by adding the line "method 2" to the media code) distributes samples evenly along the viewing ray or light ray. This can sometimes make things look smoother.

If you specify a max samples value higher than the minimum samples, POV will take additional samples, but they will be random, just like in method 1. Therefore, I suggest you set the max samples equal to the minimum samples.

Jitter will cause method 2 to look similar to method 1. It should be followed by a float, and a value of 1 will stagger the samples in the full range between samples.
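A small emitting-media sketch using method 2 with equal minimum and maximum samples, as recommended above, is shown next. It uses the standard POV-Ray 3.1 requirements for media (a hollow, transparent container). The emission color and sample counts are arbitrary.

```pov
#version unofficial MegaPov 0.6;

sphere {
  0, 1
  pigment { rgbt 1 }     // fully transparent container
  hollow
  interior {
    media {
      method 2
      intervals 1
      samples 20, 20     // max equal to min, so no extra random samples
      jitter 0.5         // partial staggering between the even samples
      emission rgb <0.3, 0.2, 0.1>
    }
  }
}
```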

Sample method 3 (invoked by adding the line "method 3" to the media code) uses adaptive sampling (similar to adaptive anti-aliasing) which is very much like the sampling method used in POV-Ray 3.0's atmosphere. This code was written from the ground up to work with media, however.

Adaptive sampling works by taking another sample between two existing samples if there is too much variance in the original two samples. This leads to fewer samples being taken in areas where the effect from the media remains constant.

You can specify the anti-aliasing recursion depth using the "aa_level" keyword followed by an integer. You can specify the anti-aliasing threshold by using the "aa_threshold" keyword followed by a float. The default for "aa_level" is 4 and the default for "aa_threshold" is 0.1.

Jitter also works with method 3.

Sample method 3 ignores the maximum samples value.

It's usually best to only use one interval with method 3. Too many intervals can lead to artifacts, and POV will create more intervals if it needs them.

syntax

media {

method 2 // or 1 or 3

jitter FLOAT

}

Have you ever had POV stop half-way through a render with a "Too few sampling intervals" error? Well, this made me mad on a few occasions (media renders take a long time without having to re-start in the middle). So, if UVPov determines that you need more intervals than you specify (due to spotlights), it will create more for you automatically. So, I suggest always using one interval with method 3.
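Here is a sketch of method 3 with a single interval and the adaptive-sampling keywords set to their documented defaults. The scattering color and density pattern are illustrative, and a light source is needed for scattering media to show.

```pov
#version unofficial MegaPov 0.6;

sphere {
  0, 1
  pigment { rgbt 1 }
  hollow
  interior {
    media {
      method 3
      intervals 1         // more intervals are created automatically if needed
      samples 5, 5        // method 3 ignores the maximum
      aa_level 4          // recursion depth (default 4)
      aa_threshold 0.1    // variance that triggers an extra sample (default 0.1)
      scattering { 1, rgb 0.5 }
      density { spherical }
    }
  }
}
```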

Author: Lummox JR, July 1999 (beta 0.91)

The isoblob combines the traits of a blob and an isosurface, allowing blob-like components to be specified with user-supplied density functions. The result isn't *perfect*, and nowhere near as good-looking as a regular blob where simple shapes are involved, but it's not half-bad.

This is the complete isoblob patch, including the function-normal patch it may rely on for more detailed surface normals.

isoblob {

threshold <THRESHOLD> // similar to blob threshold, not to isosurface

[accuracy <ACCURACY>]

[max_trace <MAX_TRACE>] // maximum intersections per interval -- default is quite high

[normal [on | off]] // Accurate normal calculation -- default is "off", using old method.

// The function-normal patch makes this keyword available to the

// isosurface and parametric primitives as well

// Functions

// <x, y, z> -- coordinates in component space

// <r, s, t> -- coordinates in isoblob space

// Functions should generally return a value from 0 to 1.

function { ... } // function 1 -- at least one is required

[function { ... } ...] // functions 2 and up

// Components

// Strength is multiplied by the density function to yield true density, just as in blobs

// Function numbers start at 1, and go in order of definition.

// Spherical bounding shape

// Translated so that component space is centered at <0,0,0>

sphere {

<CENTER>, <RADIUS>, [strength] <STRENGTH>

[function] <FUNCTION NUMBER>

// Insert any other blob-component modifiers here.

}

// Cylindrical bounding shape

// Transformed so that component space is centered around axis from

// <0, 0, 0> to <0, 0, length>

cylinder {

<END1>, <END2>, <RADIUS>, [strength] <STRENGTH>

[function] <FUNCTION NUMBER>

// Insert any other blob-component modifiers here.

}

}
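The following is a minimal two-component isoblob, written against the syntax listed above. Function 2 adds noise3d() evaluated in isoblob space (r, s, t), so the noise does not move with the component. The threshold and the noise weight are guesses, and the output range of noise3d() is assumed to be roughly 0 to 1.

```pov
#version unofficial MegaPov 0.6;

isoblob {
  threshold 0.3
  // function 1: density 1 at the centre, 0 at distance 1 (component radius 1)
  function { max(1 - sqrt(sqr(x) + sqr(y) + sqr(z)), 0) }
  // function 2: same falloff, disturbed by noise taken in isoblob space
  function { max(1 - sqrt(sqr(x) + sqr(y) + sqr(z)) + 0.2*noise3d(r, s, t), 0) }
  sphere { <-0.5, 0, 0>, 1, strength 1 function 1 }
  sphere { < 0.5, 0, 0>, 1, strength 1 function 2 }
  pigment { rgb <1, 0.8, 0.6> }
}
```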

New functions / values allowed for isosurfaces, media, etc.:

Apply accurate normal calculation to isoblobs by using the "normal" keyword (outside of a texture). This can be followed by on/off, true/false, 1/0, etc. The default is to use close approximation, the original method of choice for isosurfaces and parametrics.

Rendering time seems comparable between ordinary calculation and accurate. The more accurate normal calculation may cause a very slight slowdown, but in most cases the difference should be hard to notice.

Any built-in function, pigment function, or noise3d () that is encountered has its normal "fudged" in the same way a normal would otherwise be found for the main function -- by close approximation. There's no point in going through the accurate-normal process in these cases unless accuracy is an overriding concern.

atan2 () doesn't return a proper normal. Anyone with the brains to fix this is welcome to try; I wash my hands of it. I've tried literally everything I could think of, usually at least twice. (The NORMAL_ macros in f_func.c are pretty straightforward; the calculus used is harder).

Many isoblobs may render faster with accurate normals, because of the overhead of transforming x,y,z into component space six times with the close-approximation method.

Q: What is an isoblob?

A: An isoblob is a combination of an isosurface and a blob. An ordinary blob is a combination of shapes with different densities. An isosurface, a primitive type which exists in the Superpatch, can define its surface by a function. The isoblob takes the blob concept and modifies it, so that the density of each component is specified by a function, and thus things like random noise can be included in the density function. Density functions can be defined a lot more specifically and accurately, and with much more flexibility. Plus, more complex shapes are possible than with either a blob or an isosurface alone.

Q: What are the advantages of an isoblob?

A:

Greater flexibility than a blob. A blob can only do two specific types of shapes. Even with heavy modification, it could do few more. Toruses cannot be used in a blob, nor can more complicated functions. A simple box is beyond the capability of a modified blob primitive.

Greater complexity than an isosurface. Even if density functions were added together directly to be combined in a single function, that one function would be overly complicated. By transforming a component's coordinates, its density function can be simplified. And for larger objects with many components, a single function would be inadequate (and too slow) to define the whole thing, even if the function could be made big enough.

Better speed than several isosurface functions. If multiple isosurfaces interacted by adding density values like a blob, the result would be very slow to calculate. In ordinary blobs, the shapes only interact in a few small regions where they overlap. It is easier to calculate density values only where their density could be greater than zero. Thus the ability to set a bounding shape for each component is a great advantage in speed.

Q: What are the disadvantages of an isoblob?

A:

Slower than a blob. A blob is a fine-tuned shape, designed to break things up into small intervals where the shape can be treated like a quartic. It's many times faster than trying to solve for the surface of a function.

Slower than an isosurface. An isosurface uses only a single function, whereas an isoblob breaks up solutions into different intervals where a ray intersects bounding shapes, just like a blob.

Fewer options for finding solutions than an isosurface. An isosurface has the sign value, different methods for solving, etc. The isoblob uses only method 1, assumes a sign of -1 (because the functions are for density values), etc.

Q: Why are the components called "sphere" and "cylinder"?

A: The sphere and cylinder keywords are used to define the bounding shape of each component. A spherical component centered at <5,6,7> with radius 3 will have x, y, and z with a distance of up to 3 units from the origin; the component is then translated to <5,6,7>. (Its <r,s,t> values are centered around <5,6,7>, not the origin.) Similarly, a cylindrical component has x, y, and z values from <0,0,0> to <0,0,length>, length being the length of the cylinder from end to end, and its x and y values stay within the cylinder's radius.

Q: What are the r, s, and t values and how do I use them?

A: <r,s,t> is a vector representing a point in isoblob space, whereas <x,y,z> is the point in component space. Each component has its <x,y,z> values transformed so that all spheres are centered at the origin and all cylinders begin at the origin. However, it is often useful to know where a point will fall after the component has been scaled, rotated, translated, etc. into its final position (within the isoblob, not within the world). Think of <x,y,z> as being the component's personal set of coordinates, and <r,s,t> as the isoblob's. (Note that since components are meant to overlap, it's quite possible for the same <r,s,t> point to have different sets of corresponding <x,y,z> values, one set for each component.)

Q: What are some common density functions to use?

A: These are some common density functions, ranging from 0 to 1. Remember that components can be scaled so that different components use the same density function. Squaring a density function will cause more curving where different components meet; if you do this to every density function, be sure to square the threshold also. It is also a good idea to include max(...,0) to avoid negative densities.

- Sphere: max( 1-sqrt(sqr(x)+sqr(y)+sqr(z))/radius ,0)

- Cylinder: max( 1-(sqr(x)+sqr(y))/radius ,0)

- Box (<x,y,z> from -1 to 1): max( 1-max(max(abs(x),abs(y)),abs(z)) ,0)

- Cone (point at z=height): max( 1-z/height-sqrt(sqr(x)+sqr(y))/base_radius ,0)

- Torus (around z axis): max( 1-sqrt( sqr( sqrt(sqr(x)+sqr(y))-major_radius ) +sqr(z) )/minor_radius ,0)

- Paraboloid (tip at z=height): max( 1-z/height-(sqr(x)+sqr(y))/base_radius ,0)

- Helix (one rotation): max( 1-sqrt( sqr(x-major_radius*cos(z*2*pi/height)) + sqr(y-major_radius*sin(z*2*pi/height)) )/minor_radius ,0)

Old blob-style density functions:

- Blob Sphere: sqr(max( 1-sqr((sqr(x)+sqr(y)+sqr(z))/radius) ,0))

- Blob Cylinder: sqr(max( 1-sqr((sqr(x)+sqr(y))/radius) ,0))

(Ordinary blob cylindrical components have hemisphere end-caps, which are not included in this function. To accurately simulate an ordinary blob, you'll need to include the hemispheres manually.)

- Blob Base Hemisphere: if(z,0, sqr(max( 1-sqr((sqr(x)+sqr(y)+sqr(z))/radius) ,0)))

- Blob Apex Hemisphere (translated to end of cylinder): if(z, sqr(max( 1-sqr((sqr(x)+sqr(y)+sqr(z))/radius) ,0)) ,0)
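This sketch applies the advice above about squaring. Both density functions are squared, so the threshold is squared too (0.3 becomes 0.09). Function 2 is the torus entry from the list with hypothetical values major_radius = 1 and minor_radius = 0.3, and its bounding sphere of radius 1.3 just encloses that torus.

```pov
#version unofficial MegaPov 0.6;

isoblob {
  threshold 0.09   // 0.3 squared, because every density below is squared
  // function 1: squared spherical falloff, radius 1
  function { sqr(max(1 - sqrt(sqr(x) + sqr(y) + sqr(z)), 0)) }
  // function 2: squared torus around the z axis (major 1, minor 0.3)
  function { sqr(max(1 - sqrt(sqr(sqrt(sqr(x) + sqr(y)) - 1) + sqr(z))/0.3, 0)) }
  sphere { <0, 0, 0>, 1, strength 1 function 1 }
  sphere { <0, 0, 0>, 1.3, strength 1 function 2 }
  pigment { rgb 1 }
}
```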

Author: R. Suzuki

THERE IS NO MANUAL AVAILABLE FOR THE ISOSURFACE PATCH. What follows are bits and pieces collected from the Internet. You will have to read this and look at the examples, then try a few things and see what happens.

Description: With the isosurface patch, you can make iso-surfaces and parametric surfaces for various 3D functions.

You can specify the function either as an expression f(x,y,z) in the POV file or by the name of a built-in function.

The iso-surface can be used as a solid CSG shape type.

Syntax of "isosurface" object

isosurface {

function { f1(x, y, z) [ | or & ] f2(x, y, z) .... }

or function { "functionname", <P0, P1, ..., Pn > }

// P0, P1,..., Pn are parameters for functionname(x,y,z)

// function (x, y, z) < 0 : inside for solid shapes.

contained_by { box {<VECTOR>, <VECTOR> } } // container shape

or contained_by { sphere {<VECTOR>, FLOAT_VALUE } }

[ accuracy FLOAT_VALUE ]

[ max_trace INTEGER_VALUE [or all_intersections] ]

[ threshold FLOAT_VALUE ]

[ sign -1 ] // or 1

[ max_gradient FLOAT_VALUE]

[ eval ] // evaluates max_gradient value

[ method 1 ] // or 2

[ normal [on | off]] // Accurate normal calculation -- default is "off", using old method.

[ open ] // clips the isosurface with the contained_by shape

.....

}

Note: method 2 requires max_gradient value (default max_gradient is 1.1).

The simplest example is:

isosurface { function { "sphere", <1> } }

The 'function' keyword specifies the potential function you want to see. In this case, it is the "sphere" function which will give you a unit sphere when the parameter is <1>. This can be scaled, rotated and translated.

For an overview of possible built-in function keywords, see further on.

To reduce calculation time, "isosurface" searches for the equipotential surface only within a finite container region (sphere or box). You can change this region using the "contained_by" keyword with a box or sphere.

Note: contained_by {} must be specified or an error message will be given.

isosurface {

function { "sphere", <6> }

contained_by { box { <-5, -5, -5>, <5, 5, 5> } }

}

Where the container cuts through the surface, "open" allows you to remove the container's surface. On the other hand, if you use "contained_by" without "open", you will see the surface of the container where it cuts the shape. If you want to use isosurfaces with CSG operations, do not use "open" (and you may have to use "max_trace" in some cases).
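To see the effect of "open", the fragment below puts a built-in sphere of radius 1.2 inside a box that is too small for it. With "open", the sphere shows six circular holes where it leaves the box; removing "open" caps those holes with flat box faces. The sizes are arbitrary.

```pov
#version unofficial MegaPov 0.6;

isosurface {
  function { "sphere", <1.2> }                      // sphere of radius 1.2
  contained_by { box { <-1, -1, -1>, <1, 1, 1> } }  // smaller than the sphere
  open   // remove the box faces; leave the clipped sphere surface only
  pigment { rgb <0.9, 0.7, 0.2> }
}
```

Because "open" leaves the object without a closed surface, leave it out if the isosurface is going into a CSG operation.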

[default THRESHOLD_VALUE = 0.0 ]

The potential function of "sphere" is f(x, y, z) = P0 - sqrt(x*x+y*y+z*z)

By default, POV-Ray searches for the equipotential surface f=0. You can change this value using the "threshold" keyword. POV-Ray then looks for the points where the value of the function equals THRESHOLD_VALUE.

isosurface {

function { "sphere", <1> }

threshold 0.3

}

In the case of this example, the radius of the sphere is 0.7.

[default = 1 ]

The "sign" keyword specifies which region is inside or outside.

"sign 1": f(x, y, z) -THRESHOLD_VALUE <0 inside

"sign -1": f(x, y, z) -THRESHOLD_VALUE <0 outside

[default MAX_TRACE = 1 ]

This is either an integer or the keyword all_intersections and it specifies the number of intersections to be found with the object. Unless you’re using the object in a CSG, 1 is usually sufficient.

"isosurface" can be used in CSG (CONSTRUCTIVE SOLID GEOMETRY) shapes since "isosurface" is a solid finite primitive. Thus you can use "union", "intersection", "difference", and "merge". By default, "isosurface" searches for only the first surface that the ray intersects. However, in the case of "intersection" and "difference", POV-Ray must find not only the first surface but also the other surfaces. For instance, the following gives us an improper shape.

difference {

sphere { <-0.7, 0, 0.4>, 1 }

isosurface {

function { "sphere", <1> }

translate <0.7, 0, 0>

}

}

In order to see a proper shape, you must add the "max_trace" keyword.

difference {

sphere { <-0.7, 0, 0.4>, 1}

isosurface {

function { "sphere", <1> }

max_trace 2

translate <0.7, 0, 0>

}

}

[default MAX_GRADIENT = 1.1 ]

The "isosurface" finding routine can find the first intersecting point between a ray and the equipotential surface of any continuous function if the maximum gradient of the function is known. By default, however, POV-Ray assumes the maximum gradient is about 1 (the default max_gradient value is 1.1). Thus it works well when the real maximum gradient of the function is 1, e.g. f = P0-sqrt(x*x+y*y+z*z).

In the case that the real maximum gradient is much lower than 1, POV-Ray can produce the shape properly but the rendering time will be long. If the maximum gradient is much higher than 1, POV-Ray cannot find intersecting points and you will see an improper shape. In these cases, you should specify the maximum gradient value using the "max_gradient" keyword.

If you do not know the max_gradient of the function, you can see the maximum gradient value in the final statistics message if you add the eval option. If the max_gradient value of the final render is greater than that of the POV file, you should change the max_gradient value.

If you set the eval option without the max_gradient keyword, POV-Ray will try to estimate maximum gradient semi-automatically using results of neighboring pixels. However, this is not a perfect method and sometimes you have to use fixed max_gradient or additional parameters for the eval option.

Since the initial max_gradient estimation value is 1, estimation works well when the real maximum gradient is around 1 (usually from about 0.5 to 5). If the real maximum gradient is far from 1, the estimation will not work well. In this case, you should add the three optional parameters to the eval option.

eval <V1, V2, V3> // where V1, V2, V3 are float values.

The first parameter, V1, is the initial estimation value instead of 1. This means the minimum max_gradient value in the estimation process is V1. The second parameter is the over-estimation parameter (V2 should be 1 or greater than 1) and the third parameter is an attenuation parameter (V3 should be 1 or less than 1). Default is <1, 1.2, 0.99>. If you see an improper shape and want to change the default, you should change (increase) V1 first.
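As an illustration, the function below has the same form as the built-in "sphere" function but is ten times steeper, so its real maximum gradient is 10, well outside the range where the default estimate works. Starting the estimate at V1 = 10 follows the advice above; V2 and V3 are left at their defaults.

```pov
#version unofficial MegaPov 0.6;

isosurface {
  // 10 * (1 - distance): a unit sphere with a maximum gradient of 10
  function { 10*(1 - sqrt(x*x + y*y + z*z)) }
  contained_by { sphere { 0, 1.5 } }
  eval <10, 1.2, 0.99>   // start the max_gradient estimate at 10 instead of 1
  pigment { rgb 1 }
}
```

After a test render, the final statistics report the maximum gradient found, which can then be given directly with max_gradient.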

[ default ACCURACY = 0.001 ]

This value is used to determine how accurate the solution must be before POV stops looking. The smaller the better, but smaller values are slower too.

As "isosurface" finding is an iterative method, you can specify the accuracy of the intersecting point using the "accuracy" keyword to optimize the rendering speed. The default "accuracy" value is 0.001. Higher values (float) give faster rendering but lower quality. For example,

isosurface {

function { "torus", <0.85, 0.15> }

contained_by { box { <-1,-0.15,-1>, <1, 0.15, 1> } }

accuracy ACCURACY

}

Recursive subdivision method. The equipotential-surface finding in the POViso patch is based on a recursive subdivision method, as follows.

1. POV-Ray calculates the potential values at two points, d1 and d2 (d1 < d2), where each is a distance from the initial point of the ray.

2. If there is a possibility (calculated with a testing function T(f(d1), f(d2), MAX_GRADIENT)) that the equipotential surface exists between 'd1' and 'd2', POV-Ray calculates the potential value at another point 'd3' on the ray between 'd1' and 'd2'.

3. If there is a possibility between 'd1' and 'd3', POV-Ray calculates another point 'd4' between 'd1' and 'd3'.

4. If there is no possibility between 'd1' and 'd3', POV-Ray looks for another point 'd4' between 'd3' and 'd2'.

These calculations (1-4) are repeated recursively until (dn-dn') < "ACCURACY".

This POV-Ray version provides two isosurface finding methods. Both methods are based on recursive subdivision. By default, "method 1" is used for parsed functions and "method 2" is used for functions specified by name.

Generally, POV-Ray automatically selects the method and the user does not need to specify this keyword.

Author: Lummox JR

Apply accurate normal calculation to isosurfaces and parametrics by using the "normal" keyword (outside of a texture). This can be followed by on/off, true/false, 1/0, etc. The default is to use close approximation, the original method of choice for isosurfaces and parametrics.

Rendering time seems comparable between ordinary calculation and accurate. The more accurate normal calculation may cause a very slight slowdown, but in most cases the difference should be hard to notice.

Anything with a built-in function (Sphere, Helix, etc.) or a pigment function, or anything with noise3d (), should probably use ordinary normal calculation ("normal off") vs. accurate normal if speed is important.

Any of these functions that are encountered have their normals "fudged" in the same way a normal would otherwise be found for the main function -- by close approximation. There's no point in going through the accurate-normal process in these cases unless accuracy is an overriding concern.

atan2 () doesn't return a proper normal. Anyone with the brains to fix this is welcome to try; I wash my hands of it. I've tried literally everything I could think of, usually at least twice. (The NORMAL_ macros in f_func.c are pretty straightforward; the calculus used is harder).

Parametrics may benefit most, speedwise, from a more accurate normal calculation. Depending on the functions used, there may (potentially) even be a minor increase in speed.
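A sketch of an isosurface with accurate normals follows. The rippled sphere is a made-up function in the same "positive inside" form as the built-in "sphere" function. Its gradient is at most about 1.6, so max_gradient 2 should be a safe bound.

```pov
#version unofficial MegaPov 0.6;

isosurface {
  // sphere of radius 0.8 with a small rippled perturbation
  function { 0.8 - sqrt(x*x + y*y + z*z) + 0.05*sin(12*x)*sin(12*y) }
  contained_by { sphere { 0, 1 } }
  max_gradient 2
  normal on          // accurate normal calculation instead of close approximation
  pigment { rgb <0.7, 0.8, 1> }
}
```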

Several useful functions are provided in this patch (if known, the number of required parameters and what they control are given):

Added by: Matthew Corey Brown e-mail: <mcb@xenoarch.com>

Manual by: David Sharp e-mail: <dsharp@interport.net>

syntax

function {"ridgedmf", <P0, P1, P2, P3, P4> }

ridgedMF(x,0,z) <P0,P1,P2,P3,P4> ("ridged multifractal") can be used to create multifractal height fields and patterns. The algorithm was invented by Ken Musgrave (and others) to simulate natural forms, especially landscapes. 'Multifractal' refers to their characteristic of having a fractal dimension which varies with altitude. They are built from summing noise of a number of frequencies. The ridgedMF parameters <P0, P1, P2, P3, P4> determine how many, and which, frequencies are to be summed, and how the different frequencies are weighted in the sum.

An advantage to using these instead of a height_field{} from an image (a number of height field programs output multifractal types of images) is that the ridgedMF function domain extends arbitrarily far in the x and z directions, so huge landscapes can be made without losing resolution or having to tile a height field.

The function parameters <P0, P1, P2, P3, P4>

(The names given to these parameters refer to the procedure used to generate the function's values and the names don't seem to apply very directly to the resulting images.)

P0 = 'H' is the negative of the exponent of the basis noise frequencies used in building these functions (each frequency f's amplitude is weighted by the factor f^(-H)). In landscapes, and most natural forms, the amplitude of high frequency contributions is usually less than that of the lower frequencies. When H is 1, the fractalization is relatively smooth ("1/f noise"). As H nears 0, the high frequencies contribute equally with low frequencies (as in "white noise").

P1 = 'Lacunarity' is the multiplier used to get from one 'octave' to the next in the 'fractalization'. This parameter affects the size of the frequency gaps in the pattern. Make this greater than 1.0.

P2 = 'Octaves' is the number of different frequencies added to the fractal. Each 'Octave' frequency is the previous one multiplied by 'Lacunarity', so that using a large number of octaves can get into very high frequencies very quickly. (Normally, the really high frequencies contribute little, and will just waste your computing resources.)

P3 = 'Offset' gives a fractal whose fractal dimension changes from altitude to altitude (this is what makes these 'multifractals'). The high frequencies at low altitudes are more damped than at higher altitudes, so that lower altitudes are smoother than higher areas. As Offset increases, the fractal dimension becomes more homogeneous with height. (Try starting with Offset approximately 1.0.)

P4 = 'Gain' weights the successive contributions to the accumulated fractal result to make creases stick up as ridges.
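The sketch below turns ridgedmf into a landscape. It assumes that a function declared this way can be called as Ridged(x, 0, z) inside another function, as the ridgedMF(x,0,z) notation above suggests. The parameter values are only starting guesses (Offset 1.0 as recommended above), the container height range is a guess about the function's output range, and eval is used because the maximum gradient is unknown.

```pov
#version unofficial MegaPov 0.6;

// H = 1, Lacunarity = 2, Octaves = 6, Offset = 1, Gain = 2 (untuned guesses)
#declare Ridged = function { "ridgedmf", <1, 2, 6, 1, 2> }

isosurface {
  // solid below the surface y = Ridged(x, 0, z), in the same
  // "positive inside" form as the built-in "sphere" function
  function { Ridged(x, 0, z) - y }
  contained_by { box { <-10, -1, -10>, <10, 3, 10> } }  // height range is a guess
  eval                    // let POV-Ray estimate max_gradient
  pigment { rgb <0.6, 0.55, 0.5> }
}
```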

Author: David Sharp

syntax:

function {"heteroMF", <H, L, Octs, Offset, T> }

heteroMF(x,0,z) <P0,P1,P2,P3,P4> ("hetero multifractal") makes multifractal height fields and patterns of '1/f' noise. 'Multifractal' refers to their characteristic of having a fractal dimension which varies with altitude. Built from summing noise of a number of frequencies, the heteroMF parameters <P0, P1, P2, P3, P4> determine how many, and which, frequencies are to be summed.

An advantage to using these instead of a height_field{} from an image (a number of height field programs output multifractal types of images) is that the heteroMF function domain extends arbitrarily far in the x and z directions, so huge landscapes can be made without losing resolution or having to tile a height field.

The function parameters <P0, P1, P2, P3, P4>

P0 = 'H' is the negative of the exponent of the basis noise frequencies used in building these functions (each frequency f's amplitude is weighted by the factor f^(-H)). In landscapes, and many natural forms, the amplitude of high frequency contributions is usually less than that of the lower frequencies. When H is 1, the fractalization is relatively smooth ("1/f noise"). As H nears 0, the high frequencies contribute equally with low frequencies, as in "white noise".

P1 = 'Lacunarity' is the multiplier used to get from one 'octave' to the next. This parameter affects the size of the frequency gaps in the pattern. Make this greater than 1.0.

P2 ='Octaves' is the number of different frequencies added to the fractal. Each 'Octave' frequency is the previous one multiplied by 'Lacunarity', so that using a large number of octaves can get into very high frequencies very quickly.

P3 ='Offset' is the 'base altitude' (sea level) used for the heterogeneous scaling.

P4 ='T' scales the 'heterogeneity' of the fractal. T=0 gives 'straight 1/f' (no heterogeneous scaling). T=1 suppresses higher frequencies at lower altitudes.

syntax:

function {"hex_x",<0>}

function {"hex_y",<0>}

The parameter <0> is needed, even if it is only a 'dummy'.

Creates a hexagon pattern. See hex.pov and hex_2.pov in the demo folder for examples.

Built-in functions coming from the 'i_algbr' library (+ <number of parameters needed>).

Built-in functions coming from the 'i_nfunc' library.

Built-in functions coming from the 'i_dat3d' library.

Manual by: David Sharp

Normally, isosurfaces use a formula to find the values of the isosurface function at the various points in space. On the other hand, one might want to render data for which there is no simple formula, like temperature at different points in a room or data from a CAT scan. In this case, you can make an isosurface from an array of data using the i_dat3d library functions. These are basically the same as the 'formula' isosurfaces, except that the values of the function are given only at discrete 'sample points' and are read from a file or array (rather than calculated from a formula). The values for the function at points in space between sample points are interpolated from the given data. Once declared, they can be combined with other defined isosurface functions in the 'usual' ways. The i_dat3d library functions currently available are:

Functions of the 'i_dat3d' library:

- "data_2D_1" <P0>: a 'height field' over the x-z plane. Points between data points are linearly interpolated (first order). The y sample parameter is ignored.

- "data_2D_3" <P0>: like data_2D_1, but the function values between sample points come from a cubic interpolation (3rd order), and so the surface is usually smoother.

- "data_3D_1" <P0>: a 3D isolevel surface. Points between data points are linearly interpolated (1st order).

- "data_3D_3" <P0>: like data_3D_1, but the function values between sample points come from a cubic interpolation (3rd order).

- "odd_sphere" <P0,P1,P2>: This adds data_3D_3 to a sphere.

odd_sphere=P2*sqrt(x*x+y*y+z*z)-P1+data_3D_3

"i_dat3d" calculates (1st or 3rd order) interpolated values from 3D (or 2D) density distribution data. The distribution data should be specified by the file name in the POV file, and will be loaded in the initialize routine.

Example i_dat3d function declaration

#declare MyDataFunction = function { "data_3D_1", <1> library "i_dat3d", "MyData.DAT", <32,32,16,0> }

"data_3D_1" names which of the i_dat3d library functions to use.

The parameter vector <P0> scales the data.

library "i_dat3d" tells MegaPov that this function requires special initialization. That is, it must read a data file; in this example it would read data from the file "MyData.DAT".

The final vector <32,32,16,0> contains the parameters for the i_dat3d library, <L0,L1,L2,L3>:

- L0 = number of samples in the x direction

- L1 = number of samples in the y direction

- L2 = number of samples in the z direction

In the above example (<32,32,16,0>), the data_3D_1 function expects to find 32*32*16 values in the file "MyData.DAT", arranged as 16 z-planes, each made of 32 y-rows, each row holding the values at 32 points along x.

- L3 = format of the data in the file:

- 0: text (as could be written out using the POV scene language #write directive)

- 1: 1 byte binary integers

- 2: 2 byte binary integers

- 3: 4 byte binary integers

- 4: 4 byte binary floats

- 5: .DF3 file (POV density file format), ignoring the sample parameters in the DF3 file's header.

- 6: .DF3 file (POV density file format), taking the sample parameters (Nx, Ny, Nz) from the DF3 file's header and ignoring those in the library parameter vector <Nx,Ny,Nz,6>.

When the data comes from a POV array, the 'L3' library parameter is unnecessary / ignored.
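If the data is generated outside POV-Ray, it has to be written in the order described above: x varies fastest, then y, then z. The Python sketch below writes samples in format 4 (4-byte floats) and as an 8-bit DF3 file (a header of three big-endian 16-bit sizes followed by one byte per sample), and reads the DF3 header back the way format 6 uses it. The byte order for formats 1 to 4 is not stated in this document, so little-endian is an assumption; the file names and helper names are hypothetical.

```python
import os
import struct
import tempfile


def write_float_samples(path, values):
    """Format 4: 4-byte binary floats (little-endian assumed)."""
    with open(path, "wb") as f:
        f.write(struct.pack("<%df" % len(values), *values))


def write_df3(path, nx, ny, nz, values):
    """8-bit .DF3: three big-endian 16-bit sizes, then one byte (0-255) per sample."""
    if len(values) != nx * ny * nz:
        raise ValueError("expected nx*ny*nz samples")
    with open(path, "wb") as f:
        f.write(struct.pack(">3H", nx, ny, nz))
        f.write(bytes(values))


def read_df3_header(path):
    """Return (Nx, Ny, Nz) from a .DF3 header, as format 6 does."""
    with open(path, "rb") as f:
        return struct.unpack(">3H", f.read(6))


nx, ny, nz = 4, 3, 2
# Loop order z, y, x so that x varies fastest in the output.
samples = [(x + y + z) * 10 for z in range(nz) for y in range(ny) for x in range(nx)]

tmp = tempfile.gettempdir()
float_path = os.path.join(tmp, "MyData.DAT")
df3_path = os.path.join(tmp, "MyData.df3")
write_float_samples(float_path, [float(s) for s in samples])
write_df3(df3_path, nx, ny, nz, samples)
print(read_df3_header(df3_path))  # (4, 3, 2)
```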

When a file is used as the data source, the file must exist before the function is declared. You can also use a POV array as the data source: replace the name of the data file with the name of a predeclared and initialized array of numerical values. With arrays, function values can be computed in POV scene language using #macros and other complex techniques that would be impossible or impractical in a normal isosurface function formula.

Example declaration of i_dat3d function with an array as data source

#declare MyDataArray = array[32][32][16]
// needs some routine to fill the array with data
#declare MyDataFunction = function { "data_3D_1", <1> library "i_dat3d", MyDataArray, <32,32,16> }

Keywords:

z, accuracy, max_gradient, max_trace, func, function, no_eval, eval, lib_name, library, func_id, implicit, r, s, ln, cub, noise3d, all_intersections, func_xy, parametric, isosurface, method, close, sign, int_f, max_grad, precompute, last, contained_by

Functions and operators available:

Two isosurface-finding methods are provided. Both methods are based on recursive subdivision.

By default, "method 1", written by D. Skarda & T. Bily, is used for parsed functions, and "method 2", written by R. Suzuki, is used for functions specified by name.

Generally, "method 2" is faster than "method 1" for internal functions but it requires information on the maximum gradient of the 3D function.
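The reason "method 2" needs the maximum gradient is that a gradient bound lets whole intervals be rejected without subdividing them. The Python sketch below shows this generic idea on one ray: if |f(mid)| exceeds max_gradient times the half-width of the interval, f cannot reach zero anywhere inside it. This is an illustration of recursive subdivision with a Lipschitz bound, not R. Suzuki's actual code; it assumes a unit-length ray direction, and all names are hypothetical.

```python
import math


def find_first_hit(f, t0, t1, max_gradient, accuracy=1e-4):
    """Smallest t in [t0, t1] near which f may be zero, or None."""
    mid = 0.5 * (t0 + t1)
    half = 0.5 * (t1 - t0)
    if abs(f(mid)) > max_gradient * half:
        return None            # f cannot reach zero in this interval
    if half < accuracy:
        return mid             # interval small enough: report a hit
    left = find_first_hit(f, t0, mid, max_gradient, accuracy)
    if left is not None:
        return left            # nearer half first, so this is the first hit
    return find_first_hit(f, mid, t1, max_gradient, accuracy)


def unit_sphere_along_ray(origin, direction):
    """f(t) = |origin + t*direction| - 1, whose gradient magnitude never exceeds 1."""
    def f(t):
        p = [o + t * d for o, d in zip(origin, direction)]
        return math.sqrt(sum(c * c for c in p)) - 1.0
    return f


hit = find_first_hit(unit_sphere_along_ray((-3, 0, 0), (1, 0, 0)), 0.0, 10.0, 1.0)
miss = find_first_hit(unit_sphere_along_ray((-3, 2, 0), (1, 0, 0)), 0.0, 10.0, 1.0)
print(hit, miss)  # hit is close to 2.0, miss is None
```

If max_gradient is set too low, intervals that do contain the surface can be rejected and parts of the surface go missing; too high a value only costs speed.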

You can see various examples at:

http://www.etl.go.jp/etl/linac/public/rsuzuki/e/povray/iso/

http://atrey.karlin.mff.cuni.cz/~0rfelyus/povray.html

For a beginner's tutorial, check out:

http://members.aol.com/stbenge/

In addition to the standard mathematical functions, you may use the new pigment function:

syntax:

function { pigment { ... } }

This function returns a value based on the red and green components of the pigment's color at the specified point. Red is the most significant part and green the least significant. This lets you use height_field images that store height in the red and green components; otherwise, just use grayscale colors in your pigment.

This won't work with slope-based patterns. You can use them, but you will always get a constant value when they are looked up.
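"Red is the most significant and green the least" describes a two-byte packing. The Python sketch below shows one way such a value can be formed from 8-bit channels and how a height can be split back into red and green. The exact scaling MegaPov applies is not given here, so packing into a 16-bit integer normalized to 0..1 is an assumption, and the helper names are hypothetical.

```python
def pigment_value(red, green):
    """Combine 8-bit red (high byte) and green (low byte) into 0..1 (assumed scaling)."""
    return (red * 256 + green) / 65535.0


def split_height(h):
    """Inverse: encode a height in 0..1 as 8-bit (red, green) channels."""
    v = round(h * 65535)
    return v // 256, v % 256


print(pigment_value(255, 255))                # 1.0
print(split_height(pigment_value(18, 200)))   # (18, 200)
```

With this packing a gray color (red equal to green) maps to red/255, because 65535 = 255 * 257, so plain grayscale pigments still give the expected values.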

parametric {

function x (u,v) , y (u,v) , z (u,v)

<u1,v1>, <u2,v2>

<x1,y1,z1>, <x2,y2,z2>

[accuracy ACCURACY]

[precompute DEPTH, VarList]

}

<u1,v1>, <u2,v2>: boundaries to be computed in (u,v) space.

<x1,y1,z1>, <x2,y2,z2>: bounding box of the function in real space.

Accuracy: float value. This value determines how accurate the solution must be before POV stops searching. Smaller values are more accurate, but also slower.

precompute can speed up rendering of parametric surfaces. It divides the parametric surface into 2^depth smaller pieces and precomputes the ranges of the variables (x, y, z) that you list after the depth. Be careful! High values of depth can produce arrays larger than your available RAM.
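The Python sketch below illustrates the idea behind precompute: the (u,v) domain is halved depth times (alternating between u and v here), giving 2^depth cells, and the range of each listed variable is recorded per cell. The alternating split and the range estimate by sampling are assumptions made for illustration; MegaPov may bound the ranges differently, and the helper name is hypothetical. The x and z functions are those of the parametric example in this section.

```python
import math


def precompute_ranges(fx, fz, u_range, v_range, depth, samples=5):
    """Split (u, v) space into 2**depth cells and estimate x and z ranges per cell."""
    cells = [(u_range, v_range)]
    for level in range(depth):
        halved = []
        for (u0, u1), (v0, v1) in cells:
            if level % 2 == 0:                      # split in u
                um = 0.5 * (u0 + u1)
                halved += [((u0, um), (v0, v1)), ((um, u1), (v0, v1))]
            else:                                   # split in v
                vm = 0.5 * (v0 + v1)
                halved += [((u0, u1), (v0, vm)), ((u0, u1), (vm, v1))]
        cells = halved

    ranges = []
    steps = [k / (samples - 1) for k in range(samples)]
    for (u0, u1), (v0, v1) in cells:
        # Sample a samples x samples grid inside the cell (approximation).
        pts = [(u0 + (u1 - u0) * a, v0 + (v1 - v0) * b) for a in steps for b in steps]
        xs = [fx(u, v) for u, v in pts]
        zs = [fz(u, v) for u, v in pts]
        ranges.append(((min(xs), max(xs)), (min(zs), max(zs))))
    return ranges


ranges = precompute_ranges(lambda u, v: u * v * math.sin(24 * v),
                           lambda u, v: u * v * math.cos(24 * v),
                           (0.0, 1.0), (0.0, 1.0), depth=6)
print(len(ranges))  # 64 cells, i.e. 2**6
```

Memory grows as 2^depth: if each bound were stored as an 8-byte double, depth 15 with two variables would need 2^15 * 2 * 2 * 8 bytes = 1 MiB, and every extra level doubles that.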

Example:

parametric {

function

u*v*sin (24*v),

v,

u*v*cos (24*v)

<0,0>, <1,1>

<-1.5,-1.5,-1.5>, <1.5,1.5,1.5>

accuracy 0.001

precompute 15, [x, z] // precompute in y does not gain

// any speed in this case

}
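The bounding box given in a parametric must enclose the whole surface. For this example it is easy to check by hand, since |x| and |z| never exceed u*v <= 1 and y = v stays in [0,1], so <-1.5,-1.5,-1.5>, <1.5,1.5,1.5> is sufficient. The Python sketch below checks the same thing numerically by sampling the (u,v) range; sampling is only a sanity check, not a proof that no point lies outside.

```python
import math


def fx(u, v): return u * v * math.sin(24 * v)
def fy(u, v): return v
def fz(u, v): return u * v * math.cos(24 * v)


n = 200  # samples per parameter over <0,0> .. <1,1>
pts = [(fx(u, v), fy(u, v), fz(u, v))
       for u in (i / n for i in range(n + 1))
       for v in (j / n for j in range(n + 1))]
box_min = tuple(min(p[k] for p in pts) for k in range(3))
box_max = tuple(max(p[k] for p in pts) for k in range(3))
inside = all(-1.5 <= c <= 1.5 for p in pts for c in p)
print(box_min, box_max)
print(inside)  # True
```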

If you declare a parametric surface with the precompute keyword and then use it twice, the precomputed arrays are stored in memory only once.

Author: Nathan Kopp

Triangle mesh objects (mesh and mesh2) can now be used in CSG objects such as difference and intersection, because they now have a defined 'inside'. This only works for well-behaved meshes that form completely closed volumes. If a mesh has holes, this might still work, but the results are not guaranteed.

To determine whether a point is inside a triangle mesh, POV-Ray shoots a ray from the point in some arbitrary direction (the default is <1, 0, 0>). If this ray intersects an odd number of triangles, the point is inside the mesh; if it intersects an even number, the point is outside. You can specify the direction of this ray. For example, to use +z as the direction, add the following line to the triangle mesh description (after all other mesh data, but before the object modifiers):
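The inside test described above is an even-odd (parity) count. The Python sketch below counts hits with the standard Möller-Trumbore ray/triangle test along a chosen inside_vector. It illustrates the technique, not POV-Ray's own source, and the helper names are hypothetical. A ray that passes exactly through an edge or vertex can be counted twice, which is one reason an explicit inside_vector is sometimes useful.

```python
def ray_hits_triangle(orig, d, a, b, c, eps=1e-12):
    """Moller-Trumbore: True if the ray orig + t*d (t > 0) hits triangle abc."""
    def sub(p, q): return (p[0] - q[0], p[1] - q[1], p[2] - q[2])
    def dot(p, q): return p[0] * q[0] + p[1] * q[1] + p[2] * q[2]
    def cross(p, q):
        return (p[1] * q[2] - p[2] * q[1],
                p[2] * q[0] - p[0] * q[2],
                p[0] * q[1] - p[1] * q[0])

    e1, e2 = sub(b, a), sub(c, a)
    p = cross(d, e2)
    det = dot(e1, p)
    if abs(det) < eps:
        return False                 # ray parallel to the triangle's plane
    inv = 1.0 / det
    s = sub(orig, a)
    u = dot(s, p) * inv
    if u < 0 or u > 1:
        return False
    q = cross(s, e1)
    v = dot(d, q) * inv
    if v < 0 or u + v > 1:
        return False
    return dot(e2, q) * inv > eps    # hit must lie in front of the origin


def inside_mesh(point, triangles, inside_vector=(1, 0, 0)):
    """Odd number of hits along inside_vector means the point is inside."""
    hits = sum(ray_hits_triangle(point, inside_vector, *tri) for tri in triangles)
    return hits % 2 == 1


A, B, C, D = (0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1)
tetra = [(A, B, C), (A, B, D), (A, C, D), (B, C, D)]   # a closed tetrahedron
print(inside_mesh((0.1, 0.2, 0.3), tetra))   # True  (1 hit)
print(inside_mesh((-1, 0.2, 0.3), tetra))    # False (2 hits)
```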

inside_vector <0, 0, 1>

This change does not affect unions of triangles; these are still always hollow.

Author: Nathan Kopp

The new mesh syntax is designed for use in conversion from other file formats. This format was developed by Nathan Kopp and Thomas Baier.

mesh2 {

vertex_vectors {

number_of_vertices,

<vertex1>, <vertex2>, ...

}

normal_vectors {

number_of_normals,

<normal1>, <normal2>, ...

}

uv_vectors {

number_of_uv_vectors,

<uv_vect1>, <uv_vect2>, ...

}

texture_list {

number_of_textures,

texture { Texture1 },

texture { Texture2 }, ...

}

face_indices {

number_of_faces,

<index_a, index_b, index_c> [,texture_index [, texture_index, texture_index]],

<index_d, index_e, index_f> [,texture_index [, texture_index, texture_index]],

...

}

normal_indices {

number_of_faces,

<index_a, index_b, index_c>,

<index_d, index_e, index_f>,

...

}

uv_indices {

number_of_faces,

<index_a, index_b, index_c>,

<index_d, index_e, index_f>,

...

}

[object modifiers]

}

The normal_vectors, uv_vectors, and texture_list sections are optional. If the number of normals equals the number of vertices then the normal_indices section is optional and the indexes from the face_indices section are used instead. Likewise for the uv_indices section.

The indexes are ZERO-BASED! So the first item in each list has an index of zero.
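Since mesh2 is meant as a conversion target, here is a small Python sketch of a converter that turns a list of triangles into mesh2 text with vertex_vectors and face_indices. Shared vertices are stored once, and the first vertex gets index 0. It writes only the two required sections; the function names and the output indentation are hypothetical choices.

```python
def vec(values):
    """Format a tuple as a POV vector such as <0, 1, 2>."""
    return "<" + ", ".join(str(c) for c in values) + ">"


def to_mesh2(triangles):
    """Build mesh2 text from triangles given as three (x, y, z) tuples each."""
    vertices, index_of, faces = [], {}, []
    for tri in triangles:
        face = []
        for v in tri:
            if v not in index_of:
                index_of[v] = len(vertices)   # zero-based: first vertex is 0
                vertices.append(v)
            face.append(index_of[v])
        faces.append(face)

    lines = [
        "mesh2 {",
        "  vertex_vectors {",
        "    %d," % len(vertices),
        "    " + ", ".join(vec(v) for v in vertices),
        "  }",
        "  face_indices {",
        "    %d," % len(faces),
        "    " + ", ".join(vec(f) for f in faces),
        "  }",
        "}",
    ]
    return "\n".join(lines)


square = [((0, 0, 0), (1, 0, 0), (1, 1, 0)),
          ((0, 0, 0), (1, 1, 0), (0, 1, 0))]
text = to_mesh2(square)
print(text)  # 4 vertices, faces <0, 1, 2>, <0, 2, 3>
```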

You can specify both flat and smooth triangles in the same mesh. To do this, specify the smooth triangles first in the face_indices section, followed by the flat triangles. Then, specify normal indices (in the normal_indices section) for only the smooth triangles. Any remaining triangles that do not have normal indices associated with them will be assumed to be flat triangles.
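The ordering rule for mixing smooth and flat triangles can be applied mechanically. The Python sketch below takes faces tagged with optional normal indices, puts the smooth ones first, and returns the lists to write into face_indices and normal_indices. Writing the number of smooth triangles as the normal_indices count is an assumption drawn from the description above, and the helper name is hypothetical.

```python
def order_faces(faces):
    """faces: list of (vertex_indices, normal_indices or None).

    Returns (face_indices, normal_indices): smooth triangles come first,
    and flat triangles (no normal indices) are appended at the end.
    """
    smooth = [f for f in faces if f[1] is not None]
    flat = [f for f in faces if f[1] is None]
    face_indices = [vi for vi, _ in smooth + flat]
    normal_indices = [ni for _, ni in smooth]   # one entry per smooth triangle
    return face_indices, normal_indices


faces = [((0, 1, 2), None), ((2, 3, 0), (0, 1, 2)), ((4, 5, 6), None)]
fi, ni = order_faces(faces)
print(fi)  # [(2, 3, 0), (0, 1, 2), (4, 5, 6)]
print(ni)  # [(0, 1, 2)]
```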

To specify a texture for an individual mesh triangle, specify a single integer texture index following the face-index vector for that triangle.

To specify three textures for vertex-texture interpolation, specify three integer texture indices (separated by commas) following the face-index vector for that triangle.
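The two texture forms differ only in how many integers follow the face-index vector. A small Python formatter makes this explicit; the function name is hypothetical and the spacing is just one readable choice.

```python
def face_entry(indices, textures=None):
    """Format one face_indices entry.

    textures: None (no texture index), an int (one texture for the triangle),
    or three ints (one texture per vertex, interpolated).
    """
    text = "<%d, %d, %d>" % tuple(indices)
    if textures is None:
        return text
    if isinstance(textures, int):
        return "%s, %d" % (text, textures)
    if len(textures) != 3:
        raise ValueError("give one or three texture indices")
    return "%s, %d, %d, %d" % ((text,) + tuple(textures))


print(face_entry((0, 1, 2)))             # <0, 1, 2>
print(face_entry((0, 1, 2), 1))          # <0, 1, 2>, 1
print(face_entry((0, 1, 2), (0, 1, 2)))  # <0, 1, 2>, 0, 1, 2
```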

Author: Daniel Skarda (RBezier v. 0.9b)

This patch extends the current bicubic_patch with two new types - one non-rational (type 2) and one rational (type 3).

It also adds a new object type called bezier_patch, with selectable u and v orders and a trimmed_by option.

Some features of the new method:

I hope that there are no "one-pixel" holes like those that can appear with type 1.

It renders bezier patches at nearly the same speed as type 1 (OK, it is a little bit slower, but it does not require a huge amount of additional precomputed data).

Incorporating features of the new bezier_patch into the bicubic_patch would require more work.

The old syntax of the bicubic_patch was preserved. If you want to use the new method for computing ray/surface intersections, write "type 2" at the beginning of the bicubic_patch specification and use the new keyword "accuracy" instead of "flatness", "u_steps" and "v_steps".

bicubic_patch {

type 2

accuracy 0.01

<0,0,0>, <1,1,2>, ...

etc

}

If you want to use the rational bicubic_patch, use "type 3"; in addition, you must specify the control points as four-component vectors <x,y,z,w>, where "w" is the weight of the control point.

bicubic_patch {

type 3

accuracy 0.01

<0,0,0,1>, <1,1,2,0.5>,...

etc

}
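To see what the weight "w" does, the Python sketch below evaluates a rational Bezier curve with de Casteljau's algorithm in homogeneous coordinates: each point becomes (w*x, w*y, w), the points are interpolated, and the result is divided by w. A curve is shown instead of a full patch for brevity; a rational patch applies the same idea in both u and v. Treating <x,y,z> as the Cartesian position and w as a separate weight follows the description above; the helper name is hypothetical.

```python
def rational_bezier_point(points, weights, t):
    """Evaluate a 2D rational Bezier curve at t via homogeneous de Casteljau."""
    h = [(w * x, w * y, w) for (x, y), w in zip(points, weights)]
    while len(h) > 1:
        h = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
             for p, q in zip(h, h[1:])]
    wx, wy, w = h[0]
    return wx / w, wy / w


pts = [(0, 0), (1, 2), (2, 0)]
print(rational_bezier_point(pts, (1, 1, 1), 0.5))  # (1.0, 1.0): ordinary Bezier
print(rational_bezier_point(pts, (1, 3, 1), 0.5))  # (1.0, 1.5): pulled toward (1, 2)
```

With all weights equal to 1 the result is the ordinary (non-rational) curve; raising a weight pulls the curve toward that control point.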

Well, it is more complicated :) Here is a description of the bezier_patch in the style of POV-Ray's manual:

bezier_patch {

U_ORDER, V_ORDER

[accuracy ACCURACY_VALUE]

[rational]

<CP_1_1>, ..... <CP_1_U_ORDER>

. .

. .

<CP_V_ORDER_1>,.. <CP_V_ORDER_U_ORDER>

[trimmed_by { ... }]

}

U_ORDER, V_ORDER - number of control points in the u (v) direction, that is, the order of the patch in the u (v) direction plus 1.

Note: These values must be 2, 3, or 4.

ACCURACY_VALUE - specifies how accurate the computation will be. 0.01 is a good value to start with; for higher precision, specify a smaller number.

Note: ACCURACY_VALUE must be greater than zero.

rational - If specified, a rational bezier patch (or trimming curve) will be created. In the case of a rational bezier patch, the control points must be four-dimensional vectors as described for the bicubic_patch.

CP_*_* - control points of the surface - <x,y,z> or <x,y,z,w> in case of rational surfaces.

trimmed_by - see below. You can use any number of trimmed_by sections in a bezier_patch.

There are two types of trimming shapes. Here the keyword "type" has NO relation to the method used to compute the surface, as it does in bicubic_patch (I know, this could be a little confusing).
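The control points are written row by row: V_ORDER rows, each with U_ORDER points. The Python sketch below evaluates a non-rational patch in that layout by running de Casteljau's algorithm along each row in u and then across the resulting points in v. This layout is consistent with the corner mapping given for trimming curves (u=1, v=0 lands on CP_1_U_ORDER); the helper names are hypothetical.

```python
def de_casteljau(points, t):
    """Evaluate a Bezier curve given as a list of equal-length tuples."""
    while len(points) > 1:
        points = [tuple((1 - t) * a + t * b for a, b in zip(p, q))
                  for p, q in zip(points, points[1:])]
    return points[0]


def bezier_patch_point(rows, u, v):
    """rows[i][j] is CP_(i+1)_(j+1); evaluate each row in u, then the results in v."""
    return de_casteljau([de_casteljau(row, u) for row in rows], v)


# V_ORDER = 2 rows of U_ORDER = 3 control points.
rows = [[(0, 0, 0), (1, 0, 1), (2, 0, 0)],
        [(0, 1, 0), (1, 1, 1), (2, 1, 0)]]
print(bezier_patch_point(rows, 1, 0))      # (2, 0, 0) = CP_1_U_ORDER
print(bezier_patch_point(rows, 0, 1))      # (0, 1, 0) = CP_V_ORDER_1
print(bezier_patch_point(rows, 0.5, 0.5))  # (1.0, 0.5, 0.5)
```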

Imagine that you have two rectangles:

The larger rectangle is a bezier patch and the smaller one is the trimming shape. "type" specifies which part will be visible.

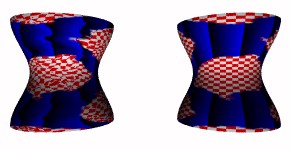

type 0 type 1

Blue = visible

Type 0 specifies that the area inside the trimming curve will be visible, while type 1 specifies that the area outside the trimming curve will be visible.
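The visibility rule can be sketched as an even-odd point-in-polygon test in (u,v) space. The Python code below approximates each trimming curve by a polyline, joins the polylines into a closed polygon, and then applies type 0 (inside visible) or type 1 (outside visible). Flattening the curves into line segments is an approximation chosen for illustration; MegaPov's own trimming code may work directly on the curves, and all helper names are hypothetical.

```python
def flatten_bezier(points, steps=16):
    """Approximate a 2D Bezier curve (list of (u, v) control points) by a polyline."""
    def point(t):
        pts = points
        while len(pts) > 1:
            pts = [((1 - t) * a[0] + t * b[0], (1 - t) * a[1] + t * b[1])
                   for a, b in zip(pts, pts[1:])]
        return pts[0]
    return [point(i / steps) for i in range(steps + 1)]


def inside_polygon(p, poly):
    """Even-odd test: cast a ray in +u and count edge crossings."""
    u, v = p
    inside = False
    for (u1, v1), (u2, v2) in zip(poly, poly[1:] + poly[:1]):
        if (v1 > v) != (v2 > v):
            cross_u = u1 + (v - v1) * (u2 - u1) / (v2 - v1)
            if cross_u > u:
                inside = not inside
    return inside


def visible(p, polygon, trim_type):
    """type 0: inside of the trimming shape is visible; type 1: outside is."""
    inside = inside_polygon(p, polygon)
    return inside if trim_type == 0 else not inside


# A square trimming shape made of four linear (ORDER 2) curves.
square = [[(0.25, 0.25), (0.75, 0.25)], [(0.75, 0.25), (0.75, 0.75)],
          [(0.75, 0.75), (0.25, 0.75)], [(0.25, 0.75), (0.25, 0.25)]]
poly = [pt for curve in square for pt in flatten_bezier(curve)]
print(visible((0.5, 0.5), poly, 0), visible((0.1, 0.1), poly, 0))  # True False
print(visible((0.5, 0.5), poly, 1), visible((0.1, 0.1), poly, 1))  # False True
```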

trimmed_by {

type TYPE

[ ORDER [rational] <CP_1>,... <CP_ORDER> ]

[ scale <u,v> ]

[ translate <u,v> ]

[ rotate ANGLE ]

}

Trimming shapes are closed, piecewise bezier curves, that is, sequences of (rational) bezier curves.

ORDER - order of trimming curve plus 1

Note: This value must be 2, 3, or 4.

rational - specifies that the curve is rational; omit it for a non-rational curve.

CP_* - control points of the trimming curve in the <u,v> coordinate system of the trimmed bezier surface. Hence <0,0> is mapped to CP_1_1, <1,0> to CP_1_U_ORDER, <0,1> to CP_V_ORDER_1, and <1,1> to CP_V_ORDER_U_ORDER.

scale, translate, rotate - you can use these keywords to transform the control points of curves already inserted into the current trimming shape. You can use an arbitrary number of curves and transformations in one trimmed_by section, and you can mix them freely to obtain the desired shape (see the examples using #while statements in the test-trims/ directory).

If the first control point of a curve is different from the last control point of the previous one they will be automatically connected. The same applies for the last control point of the last curve and the first point of the first curve.
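The automatic connection rule can be sketched as follows: whenever a curve does not start where the previous one ended, a straight two-point segment is inserted, and a final segment closes the shape if needed. This Python sketch compares points exactly, which is how tiny numeric differences end up producing extra segments; whether MegaPov uses an exact comparison or a tolerance is an assumption, and the helper name is hypothetical.

```python
def close_trimming_shape(curves):
    """Insert straight 2-point segments where consecutive curves do not meet."""
    result = []
    for curve in curves:
        if result and result[-1][-1] != curve[0]:
            result.append([result[-1][-1], curve[0]])   # connecting line
        result.append(curve)
    if result and result[-1][-1] != result[0][0]:
        result.append([result[-1][-1], result[0][0]])   # close the shape
    return result


curves = [[(0, 0), (1, 0)], [(1, 1), (0, 1)]]
shape = close_trimming_shape(curves)
for segment in shape:
    print(segment)
# [(0, 0), (1, 0)]
# [(1, 0), (1, 1)]   <- inserted connector
# [(1, 1), (0, 1)]
# [(0, 1), (0, 0)]   <- inserted closing line
```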

Note that if you use transformations (especially rotations), points will not be transformed exactly, so consecutive endpoints may no longer match and an additional connecting line will be inserted. To avoid this problem, you can use the keyword 'previous' (the current value of the last control point of the previous curve) or 'first' (the first control point of the first curve). Since only the <u,v> position is copied, you also have to write the comma and the weight of this control point.
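The inexactness comes from floating-point arithmetic: rotating <1,0> by 90 degrees does not give exactly <0,1>. The Python sketch below demonstrates this, and shows why copying the stored endpoint (which is what 'previous' does) avoids the extra line. Rotating about <0,0> with the angle in degrees is an assumption about how the trimming rotate works; the helper name is hypothetical.

```python
import math


def rotate_uv(point, degrees):
    """Rotate a (u, v) point about <0,0> (pivot and degree units assumed)."""
    a = math.radians(degrees)
    c, s = math.cos(a), math.sin(a)
    u, v = point
    return (u * c - v * s, u * s + v * c)


end_of_previous = rotate_uv((1.0, 0.0), 90)  # mathematically <0,1>
typed_start = (0.0, 1.0)                      # next curve's start, typed by hand
print(end_of_previous == typed_start)         # False: u is a tiny nonzero value
reused_start = end_of_previous                # like 'previous': reuse the stored value
print(reused_start == end_of_previous)        # True: no connecting line needed
```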

Yes, trimming shapes can also be declared:

#declare identifier =

trimmed_by {